We like to describe the Crazyflie as a versatile open source flying development platform. It is something that enables you to do cool stuff. It is not a finished, polished end product on its own.

The platform offers hardware extensions in the form of decks, which you can make yourself or buy from Bitcraze. It also offers software extensions, either by altering the firmware on the device or by writing programs that communicate with the platform.

This approach makes determining the expectations and requirements for the platform and the surrounding ecosystem a bit tricky. It depends on what you as a user plan to create using our products. And since the ecosystem is growing, we need a scalable way to handle these fuzzy expectations during development and maintenance.

We think testing is a big part of solving this: testing in a systematic, scalable and reproducible way. This is the reason for setting up our first physical test lab, aimed at providing stability while moving forward with new products and features.

Testing today

Continuous integration

On each change proposal to our software we run tests. For the firmware in the device we build multiple different configurations and run unit tests. For the Crazyflie client and the Python library we make sure we can build for Linux and Windows and that the code passes our style guidelines. If any test fails we go back, update the proposed change and re-run the tests. This is our first line of defense against defects.

Release testing

For each release we follow a checklist of procedures and tests to make sure that quality does not degrade. We make sure all the examples in the Python library are working and that the Crazyflie can fly around as expected in our flight arena, using various positioning systems.

Limitations

The way we test today makes it very difficult to determine if we regress in, for example, flight capability or radio communication quality. We either test different software packages in isolation, without hardware in the loop, or we test by having a human try to estimate whether any degradation has happened since the last release.

We run into scalability issues as our ecosystem grows: it is nearly impossible to test all the different combinations of products. And it is very hard for us to detect stability or quality regressions without a more systematic approach to testing the software on relevant hardware.

Introducing the test lab

So far we have done two things:

- Created an infrastructure for setting up a site for testing our software on real devices

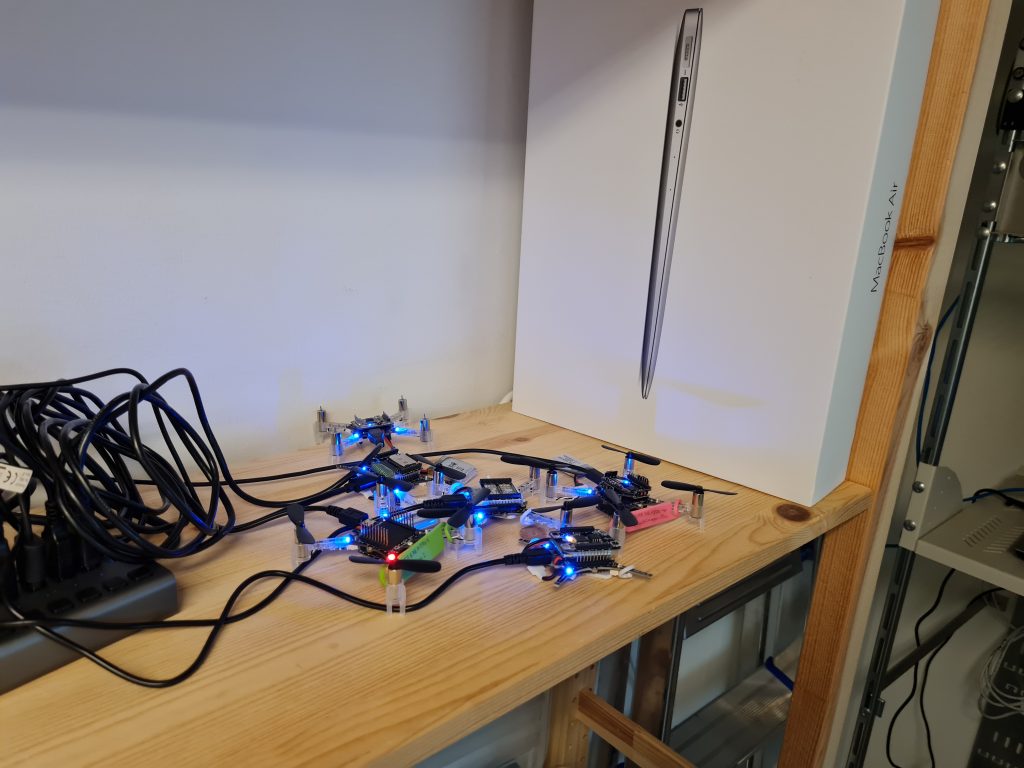

- Prepared a test lab in our printer room at our office in Malmö

Infrastructure: crazyflie-testing

This new Git repository contains the building blocks we need to set up our new test lab. You can check out the repository's README.md file for information on how to run it.

It contains the beginning of an automated QA test suite and, along with that, the start of a set of requirements for those tests. The requirements are specified in TOML files which are parsed and accessed from the tests. They are also rendered to Markdown, to make them easier for humans to read.

The repository contains a way to specify a test site, which is a collection of devices to run the test suite against. You specify your site in a TOML file which contains information about each device, such as which decks are connected and the radio:// URI. This site specification is then used by the test framework when running the tests, making sure each test is run against all devices compatible with that test.

The infrastructure also has management scripts to perform tasks like flashing all devices in a site, or recovering them if they go into bootloader mode by accident. All of this is aimed at handling testing with as little human intervention as possible.

The most recent addition to the infrastructure is the ability to test swarms using the Crazyswarm project!

Crazylab Malmö

We define the site crazylab-malmö.toml, which is the test lab we have set up in our printer room in Malmö, Sweden. It is currently defined as:

```toml
version = 1

[device.cf2_flow2_lighthouse]
radio = "radio://0/50/2M/E7E7E71706"
decks = ["bcFlow2", "bcLighthouse4"]
bootloader_radio = "radio://0/0/2M/B19CF77F05?safelink=0"

[device.cf2_flow2]
radio = "radio://0/50/2M/E7E7E71705"
decks = ["bcFlow2"]
bootloader_radio = "radio://0/0/2M/B16298A8A3?safelink=0"

[device.cf2_flow2_multiranger]
radio = "radio://0/50/2M/E7E7E71704"
decks = ["bcFlow2", "bcMultiranger"]
bootloader_radio = "radio://0/0/2M/B1CEE678C5?safelink=0"

[device.cf2_usddeck]
radio = "radio://0/50/2M/E7E7E71703"
decks = ["bcUSD"]
bootloader_radio = "radio://0/0/2M/B164C8AC96?safelink=0"

[device.cf2_lighthouse]
radio = "radio://0/50/2M/E7E7E71702"
bootloader_radio = "radio://0/0/2M/B1F519F77B?safelink=0"
decks = ["bcLighthouse4"]

[device.cf2_stock]
radio = "radio://0/50/2M/E7E7E71701"
bootloader_radio = "radio://0/0/2M/B177790F3A?safelink=0"
```

There are, for now, six devices with different combinations of decks attached. Each night, after midnight, a set of GitHub Actions jobs starts working for us. One job builds the latest versions of the firmware for the devices; another upgrades all the devices in the lab and runs the test suite along with a selection of the Python library examples. We are notified if any of these jobs fail.

This acts as a safety net for us. We will quickly know if the communication performance degrades, or if we have broken something in our parameter or logging frameworks. And we can catch silly firmware bugs as early as possible.

Future

We want to keep adding test cases and other infrastructure to our testing framework. Going forward it would be really nice to be able to test positioning systems in some way. And of course, some type of flight test, be it free-flying tests or tests using some kind of harness.

It might be interesting to look into adding simulations (hardware in the loop or not) to our testing setup. It is all a question of bandwidth: there are a lot of cool things to work on, and a limit on time and bodies.

You can help! You might even be able to help yourself while helping us! If you contribute tests that correspond to your use-case, then you can relax knowing that those tests will run each night, and that Bitcraze engineers will be notified the minute they fail.

Or you can define your own site and run the test suite against all your devices to make sure nothing strange is going on.

Hopefully this infrastructure and lab will help all of us to do more cool stuff using the Bitcraze ecosystem!