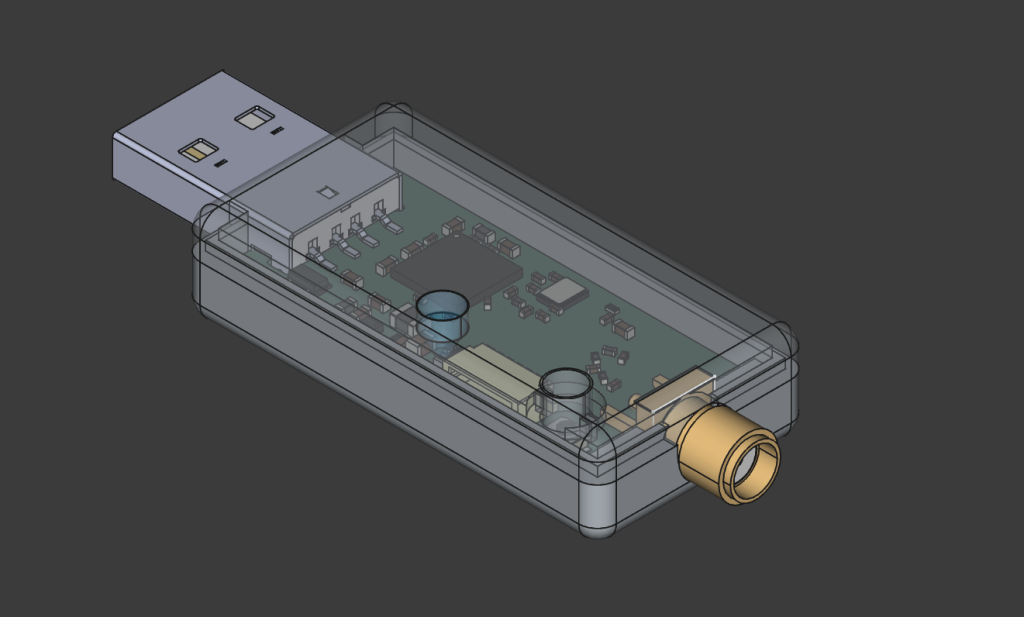

Lately, at home and at work during my Fun Fridays, I have been trying to learn more about 3D CAD, and more precisely about FreeCAD, mostly in the context of (ab)using our 3D printers :). Inspired by a couple of Crazyradio cases that have already been published, I started working on a Crazyradio 2.0 case, since this had not yet been done. I am quite happy with the result:

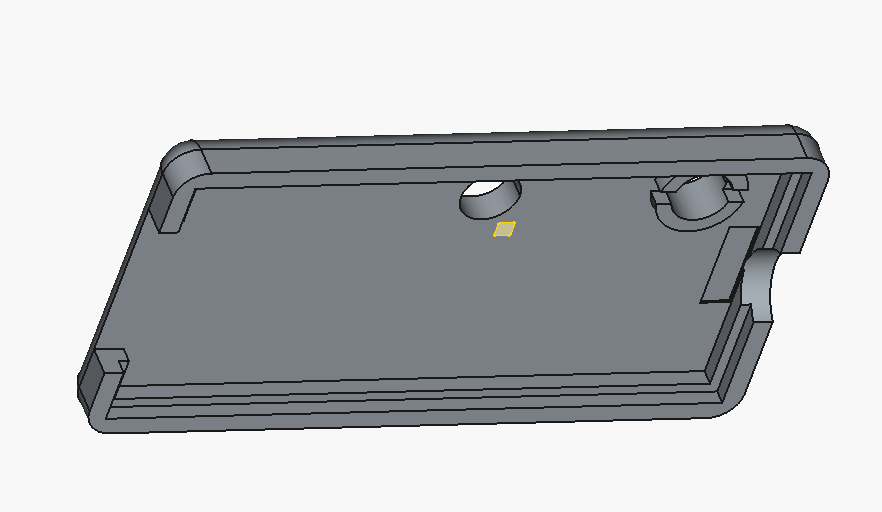

The design is mostly press-fit: the top and bottom parts are pressed together and hold thanks to the 3D-printed layers interlocking with each other. The LED lens is pressed into the top, and the button slides in the top, which guides it. The button is flush with the case since it is mainly a bootloader button and does not need to be pressed during normal use.

ECAD/CAD design

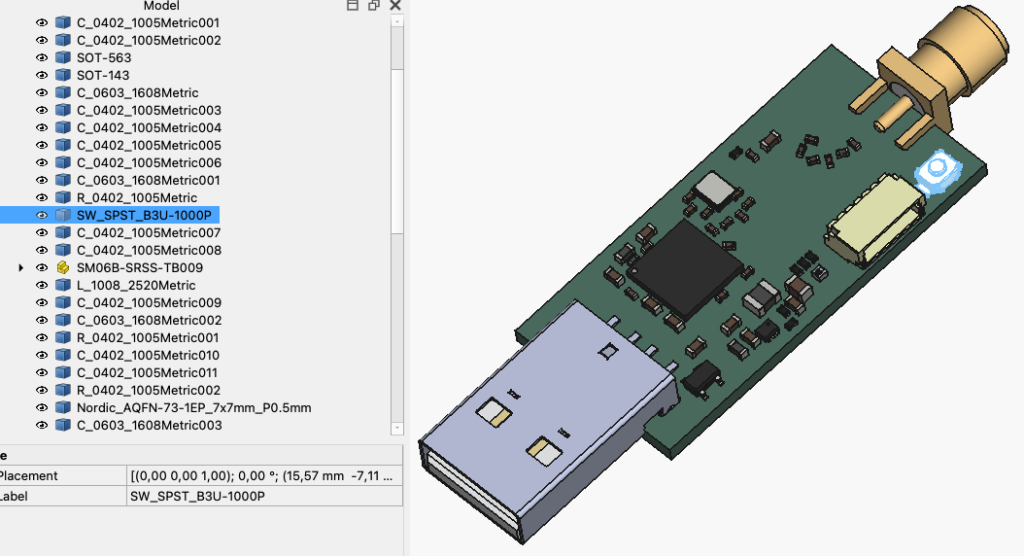

One of my goals when starting this project was to experiment with working in both Electronic CAD (KiCAD in my case) and Mechanical CAD (FreeCAD). There is an extension for FreeCAD that allows going back and forth between the two tools, but in this case it was much simpler: my board was already finished, so I only needed to get a model of it into FreeCAD.

To do so, I made sure all the important components had 3D models in the Crazyradio electronic design. I had to import a couple of models from Mouser and re-create the RGB LED in FreeCAD. I then exported the board as a STEP file. This file can be imported into FreeCAD and retains all the shapes and surfaces that are useful for working with the model.

Shape binder: Keeping it DRY

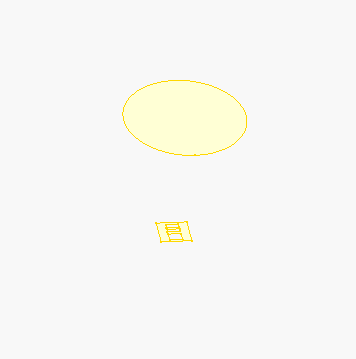

Coming from the software/electronics world, we have this notion of DRY: Don't Repeat Yourself. Ideally, I would like to apply the same principle to mechanical design and avoid, as much as possible, entering any measurement by hand. One way to do that in FreeCAD is with a Shape binder. A good example of its use is the LED lens.

I wanted to put a translucent lens just on top of the Crazyradio LED. One way to achieve that is to create a Shape binder of the LED top surface in both the TOP and Lens parts. The LED top is the yellow square in the next picture, and its presence makes it possible to align the hole in the top cover perfectly with the middle of the LED on the PCB. This avoids any error-prone manual measurement when placing the hole.

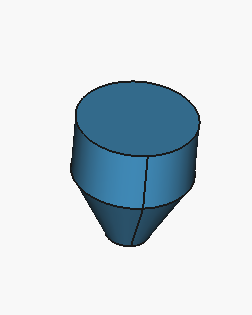
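To illustrate the idea outside of FreeCAD, here is a minimal plain-Python sketch of what the Shape binder buys you. It does not use the FreeCAD API: the `Rect` class, the `hole_center_from` function and all coordinates are made up for the example. The point is only that the hole position is computed from the referenced LED face rather than typed in by hand.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in the XY plane, in millimetres (hypothetical type)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def center(self) -> Tuple[float, float]:
        # Midpoint of the rectangle along each axis.
        return ((self.x_min + self.x_max) / 2, (self.y_min + self.y_max) / 2)


def hole_center_from(led_top: Rect) -> Tuple[float, float]:
    """Derive the cover hole position from the referenced LED top face."""
    return led_top.center


# Made-up LED top face, standing in for the face referenced from the board model.
led_top = Rect(x_min=10.0, y_min=4.0, x_max=12.0, y_max=6.0)
hole_xy = hole_center_from(led_top)

# If the LED moves on the PCB, the hole follows without editing any number.
moved_led = Rect(x_min=13.0, y_min=4.0, x_max=15.0, y_max=6.0)
moved_hole_xy = hole_center_from(moved_led)

print(hole_xy, moved_hole_xy)
```

In FreeCAD the same dependency is expressed graphically: the sketch of the hole is constrained to the bound geometry instead of to hand-entered dimensions.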

For the lens design I can go one step further: I can create a shape binder both for the LED and for the hole in the top layer. This way, the shape of the lens is derived from existing geometry and, to a large extent, does not have to be specified manually.

This makes it quite easy to align the lens perfectly on top of the LED. The same principle is used for the button, so that it slides and presses on the PCB switch with minimal play.
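As a rough sketch of that principle, again in plain Python rather than the FreeCAD API, a part that fits in a hole can take all of its dimensions from two references, leaving the fit as the only hand-entered number. The function name, the parameters and every value below are assumptions for illustration, not measurements from the actual case.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plug:
    """Cylindrical part that fits in a hole (hypothetical type), in millimetres."""
    diameter: float
    height: float


def derive_plug(hole_diameter: float, base_z: float, cover_top_z: float,
                clearance: float = 0.0) -> Plug:
    """Size a part from referenced geometry.

    hole_diameter and cover_top_z stand in for the hole referenced from the top
    cover, base_z for the referenced surface the part rests on (LED top or
    switch). clearance is the radial gap per side: 0 for a press fit, a small
    positive value for a sliding fit.
    """
    if cover_top_z <= base_z:
        raise ValueError("cover top must be above the base surface")
    return Plug(diameter=hole_diameter - 2 * clearance,
                height=cover_top_z - base_z)


# Press-fit lens: same diameter as the hole, flush with the cover (made-up values).
lens = derive_plug(hole_diameter=3.0, base_z=1.5, cover_top_z=4.0)

# Sliding button: a little radial clearance so it can move (made-up values).
button = derive_plug(hole_diameter=4.0, base_z=2.0, cover_top_z=4.0, clearance=0.25)

print(lens, button)
```

Only `clearance` is a design decision here; everything else follows from the board and the cover, which is the part a Shape binder keeps in sync.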

Final product

I pushed the current state of the case to GitHub. It is also available on MakerWorld. I plan on improving the design before calling it 1.0 and eventually uploading it to Printables and Thingiverse.

If you want to learn more about FreeCAD, I can recommend this great video series on YouTube. It goes through a lot of very useful features, such as shape binders.