This week, we have a guest blog post from Scott at DroneBlocks.

DroneBlocks is a cutting-edge platform that has transformed how educators worldwide enrich STEM programming in their classrooms. As pioneers in the EdTech space, DroneBlocks wrote the playbook on integrating drone technology into STEM curricula for elementary, middle, and high schools, offering unparalleled resources for teaching everything from computer science to creative arts. What started as free block coding software and video tutorials has become a comprehensive suite of drone and robotics educational solutions. The block coding software remains free to all, as the DroneBlocks mission has always been to empower educators and students, allowing them to explore and lead the way. This open-source attitude set DroneBlocks on a mission to find the world’s best and most accessible micro-drone for education, and they found it in Sweden!

Previously, DroneBlocks had worked alongside drone juggernaut DJI and its Tello drone. The Tello was a great tool for its time, but DJI’s decision to discontinue it with little input from partners and users made the break much easier. The hunt began for a Tello replacement that would also be an upgrade!

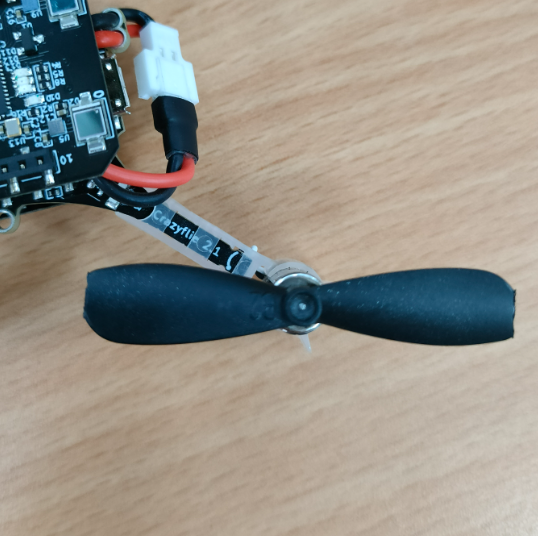

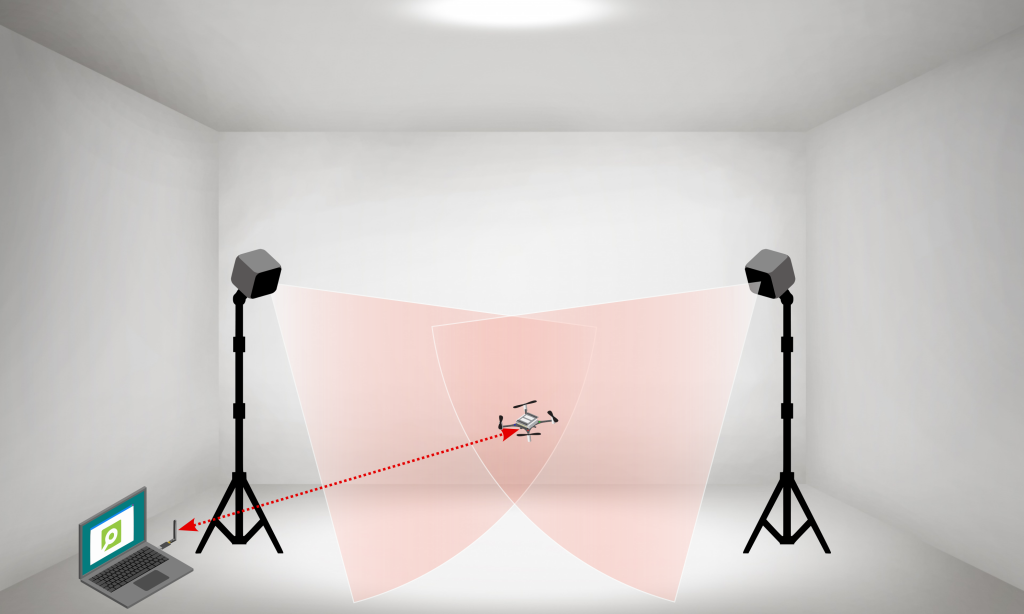

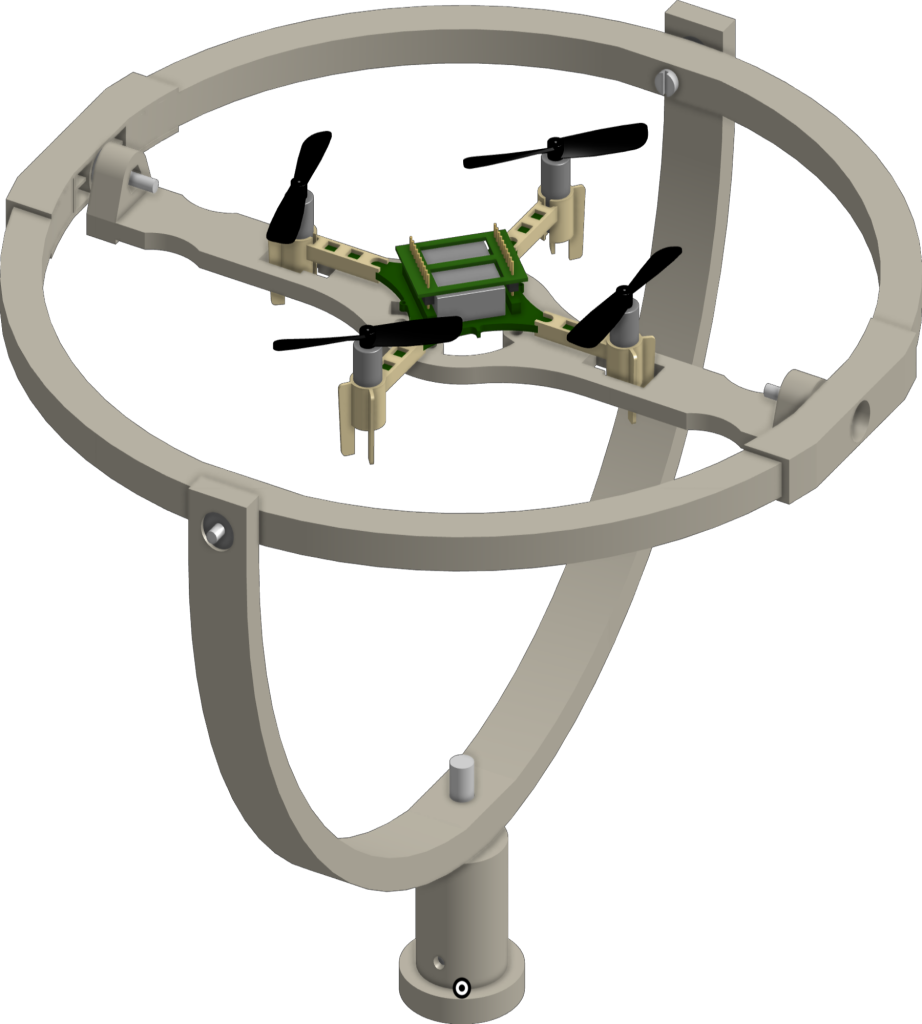

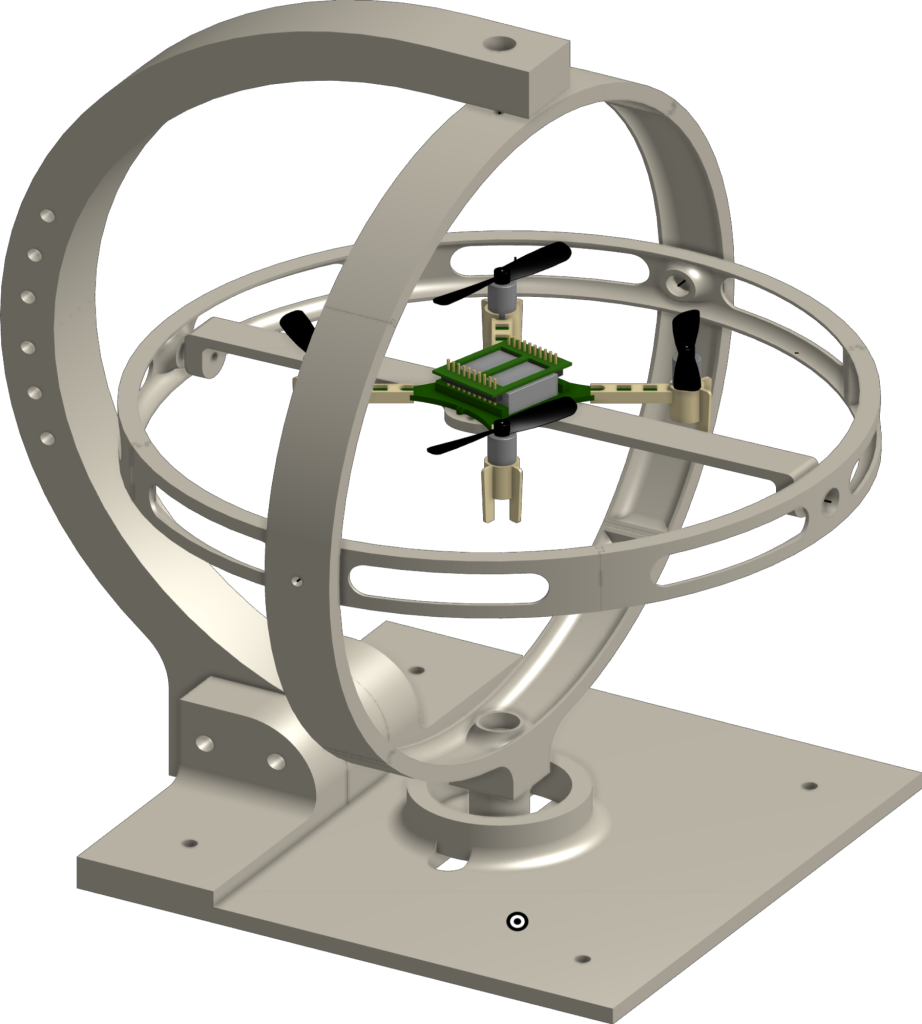

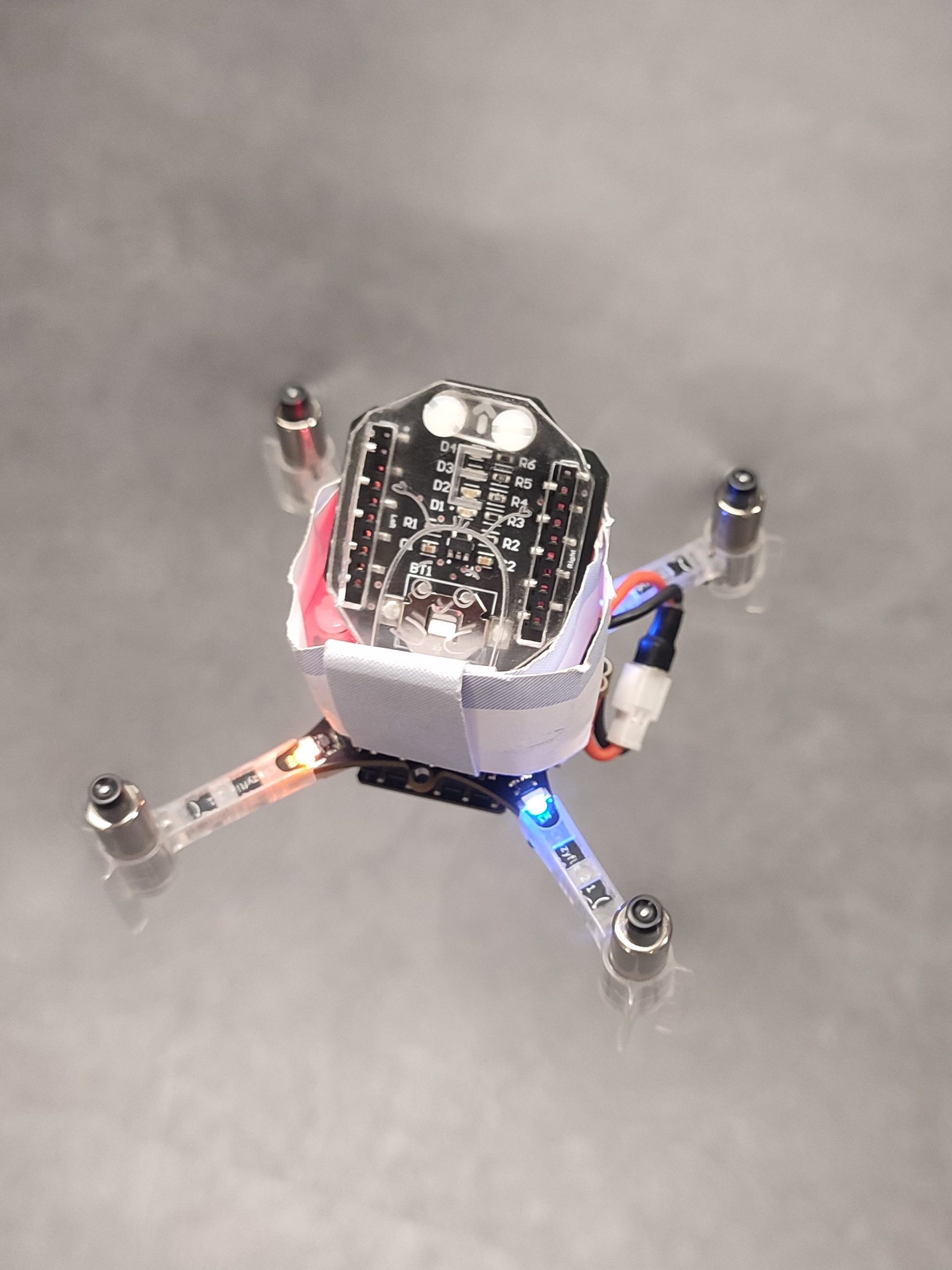

Bitcraze’s choice to build the Crazyflie as an open platform had their drone buzzing wherever there was curiosity. The Crazyflie was developed to fly indoors, to swarm, and to be mechanically simple. DroneBlocks had established that the ideal classroom micro-drone required similar characteristics. It needed to be small for safety but sturdy for durability. It also needed to be easy to assemble and simple in structure for students new to drones. Most importantly, it needed an open line of software communication so that it would be fully programmable. Finally, there had to be an opportunity for a long-lasting partnership with the drone manufacturer, one that also covered government compliance.

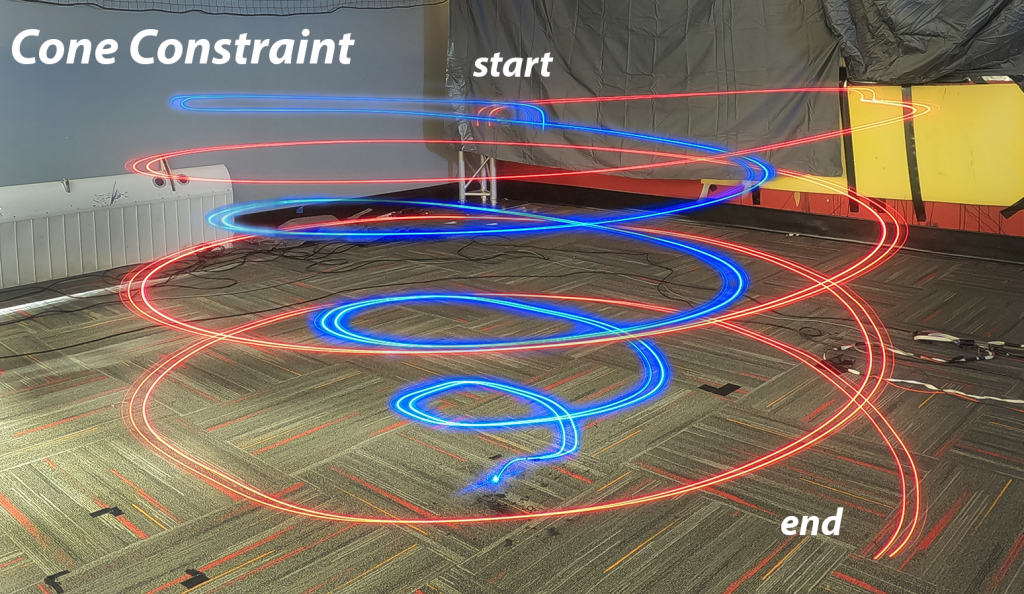

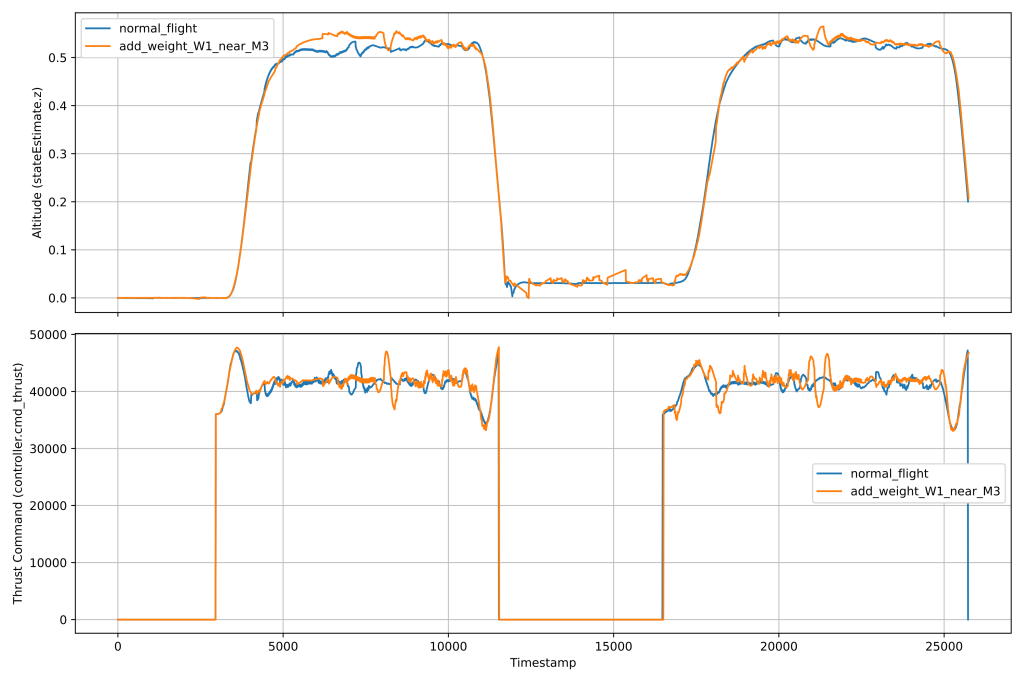

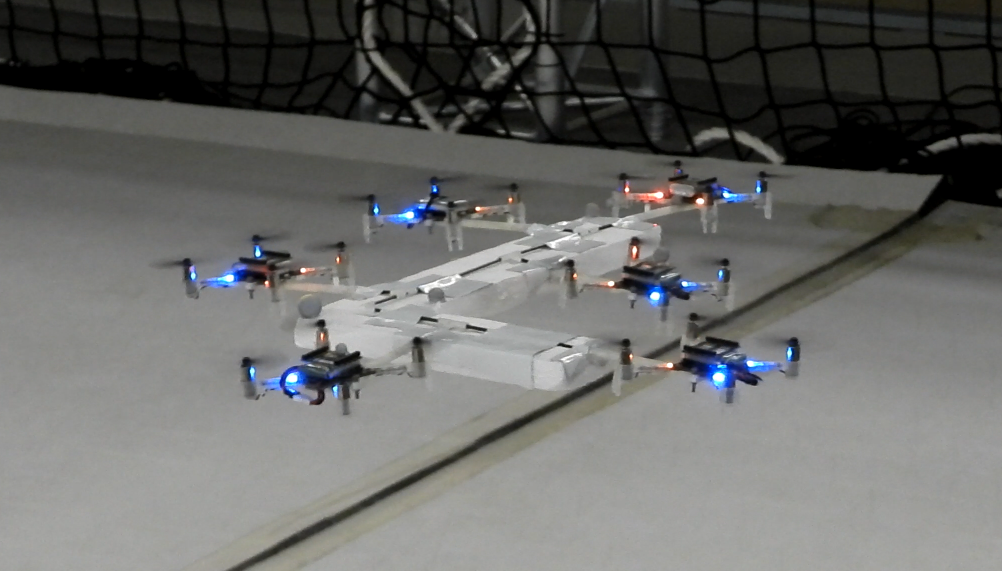

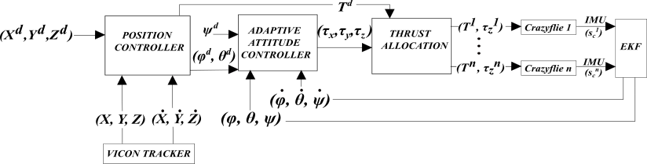

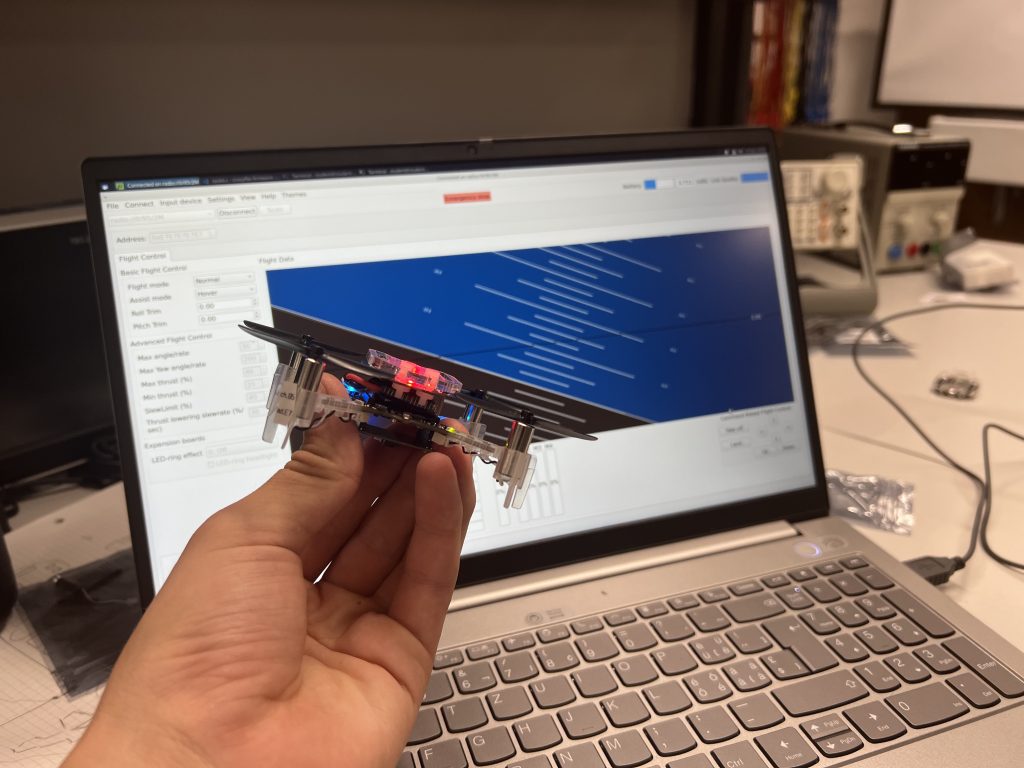

After extensive searching and testing, DroneBlocks found its diamond in the rough in the Crazyflie: bite-sized and lightweight, supremely agile and accurate, reliable and robust, and, most importantly, an open-source development platform. The DroneBlocks development team took the Crazyflie for a spin (or several) and excitedly shared it with the larger curriculum team to be mined for learning potential. The Crazyflie’s use in university-level research was a promising sign that it meant business. DroneBlocks knew the Crazyflie had a lot going for it on its own. The team imagined how, when paired with DroneBlocks’ block coding software, flight simulator, and curriculum specialists, the Crazyflie could soar to atmospheric heights!

Hardware? Check. Software? Check. But what about compatibility? DroneBlocks was immediately drawn to the open communication and ease of conversation with the Bitcraze team. It was obvious that both Bitcraze and DroneBlocks were born from a common thread and shared a mutual goal: to empower people to explore, investigate, innovate, research, and educate.

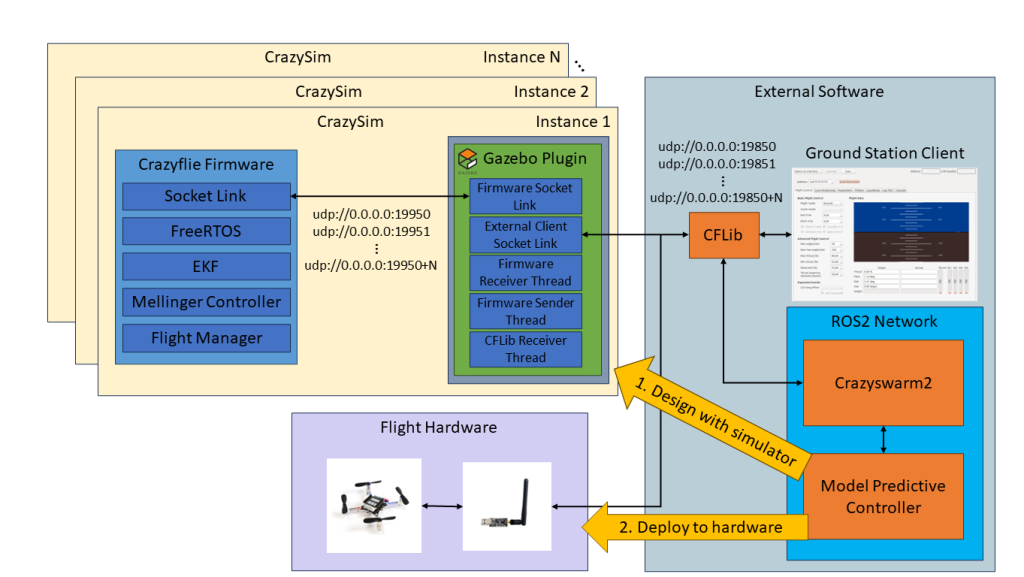

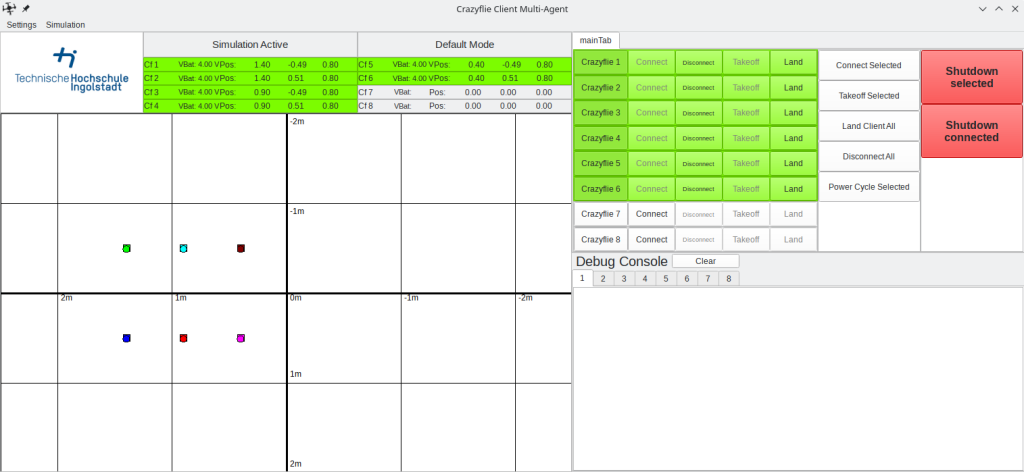

DroneBlocks has since built a new block coding interface around the Crazyflie, allowing students to fly their new drone autonomously while learning the basics of piloting and coding. The interface comes with a brand-new drone coding simulator so students can test their code and fly the Crazyflie in a virtual classroom.

The Crazyflie curriculum currently consists of courses covering building, configuring, and finally, programming your drone with block coding (DroneBlocks) and Python. DroneBlocks’ expert curriculum team designed these courses so that learners of all ages and levels can progress step by step through video series and exercises. New block coding and Python courses are in constant development and are added to the DroneBlocks curriculum platform as they are completed.

Crazyflie drones now headline DroneBlocks’ premier classroom launch kit. The DroneBlocks Autonomous Drones Level II kit includes everything a middle or high school would need to launch a STEM drone program: the hardware, necessary accessories, and safety wear, paired with the DroneBlocks software and curriculum. As a result, thousands of new students have entered the world of drones and programming thanks to the Bitcraze + DroneBlocks partnership.

DroneBlocks has become an all-inclusive drone education partner for engaging and innovative learning experiences, and the Crazyflie helps deliver this as a cutting-edge piece of hardware in a clever package.