Human-Robot Interaction (HRI) is a conference that brings together academics and industry partners to explore how humans interact with the latest developments in robotics. Held every year, it spans the many disciplines concerned with the “H” part (cognitive science, neuroscience), the “R” part (computer science, engineering) and the “I” part (social psychology, education, anthropology and, most recently, design).

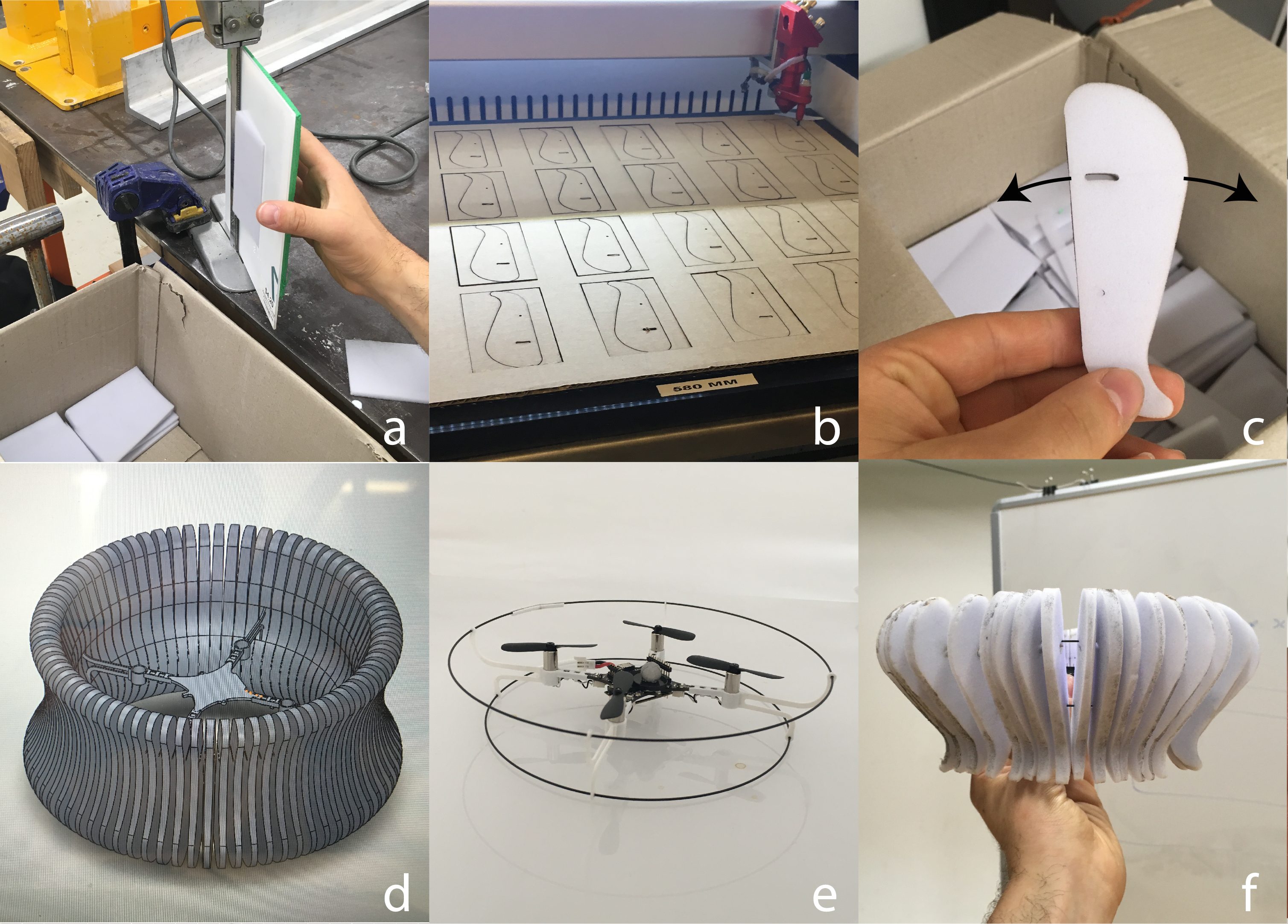

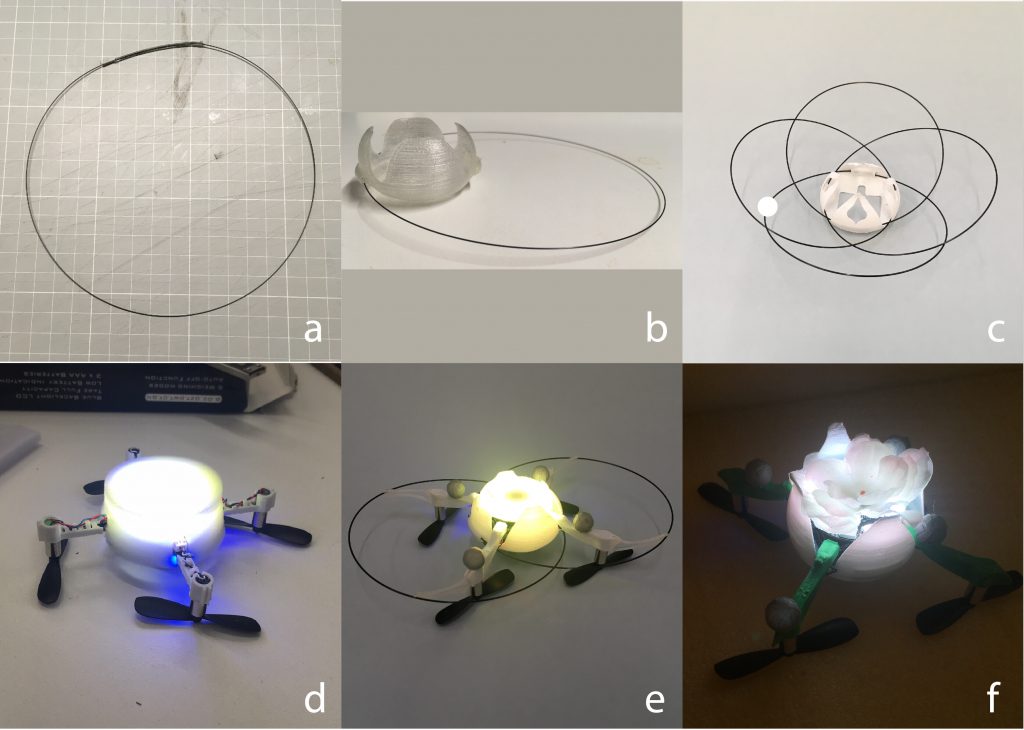

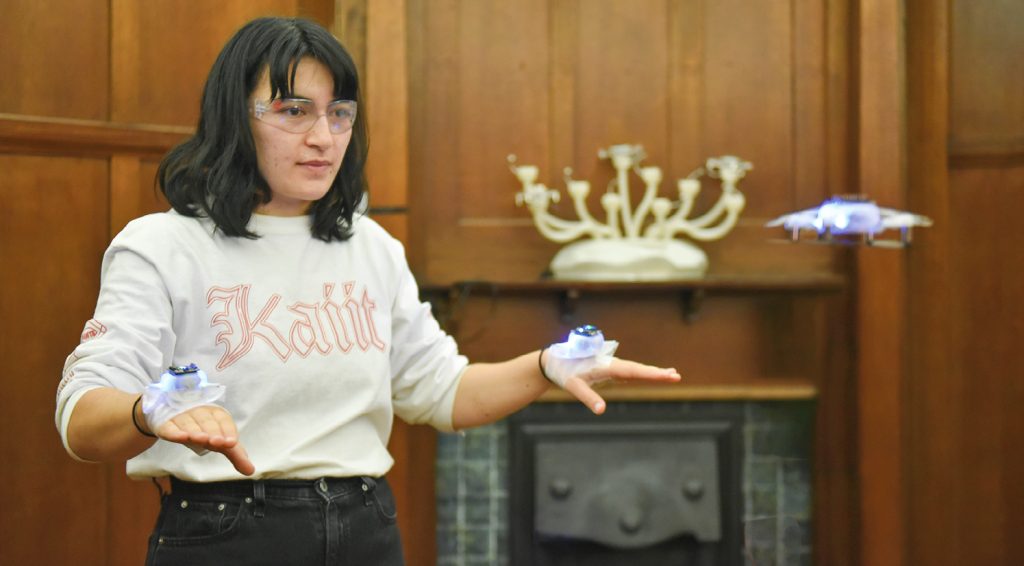

This year it was held in Melbourne (my home city), and I was so grateful to be given the chance to demonstrate a system from my PhD studies called “How To Train Your Drone” in what was its final hurrah: a retirement party! Running the demo was a pleasure, especially with such a supportive and curious HRI crowd at such a well-organised event.

The take-home message from this demonstration was this:

If you let end users shape the drone’s sensory field with their hands, they end up with an in-depth understanding of it. This lets them creatively explore how the drone relates to themselves and to their surrounding environment.

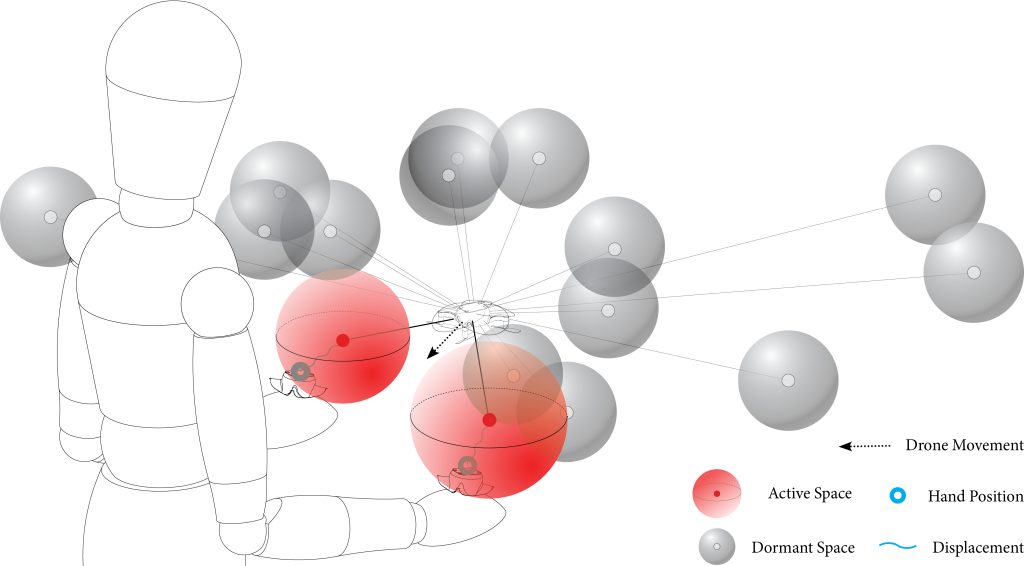

What do we mean by sensory field? It’s the area around the drone where it can “feel” the presence of the sensors mounted on the user’s hands, represented by the grey and red spheres in the figure above. Initially, the drone has no spheres and therefore cannot respond to the user’s movement at all. But by holding their hands still for a few seconds, the user can create a spherical space, positioned relative to the drone, in which the drone can sense their hands and follow them.
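To make the mechanism more concrete, here is a minimal Python sketch of how such a sensory field could work. It is not the code from the actual system: the dwell time, stillness tolerance, sphere radius, follow gain, the yaw-only frame transform and the proportional “follow” rule are all hypothetical choices made for illustration. Sphere centres are stored relative to the drone (in its body frame), so a hand is only sensed when it sits inside one of the spheres the user has created.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]

# Hypothetical tuning values, for illustration only.
DWELL_SECONDS = 2.0      # how long a hand must hold still to create a sphere
STILL_TOLERANCE = 0.05   # metres of jitter allowed while "holding still"
SPHERE_RADIUS = 0.15     # radius of each sensing sphere, in metres
FOLLOW_GAIN = 1.0        # body-frame velocity per metre of hand offset


def world_to_body(point: Vec, drone_pos: Vec, drone_yaw: float) -> Vec:
    """Express a world-frame point in the drone's body frame (yaw-only rotation)."""
    dx, dy, dz = (p - d for p, d in zip(point, drone_pos))
    c, s = math.cos(drone_yaw), math.sin(drone_yaw)
    return (c * dx + s * dy, -s * dx + c * dy, dz)


@dataclass
class SensoryField:
    # Sphere centres live in the body frame, so they move and turn with the drone.
    spheres: List[Vec] = field(default_factory=list)
    _anchor: Optional[Vec] = field(default=None, init=False)
    _still_since: Optional[float] = field(default=None, init=False)

    def update(self, t: float, hand_world: Vec, drone_pos: Vec, drone_yaw: float) -> Vec:
        """Process one hand sample and return a body-frame velocity command."""
        hand = world_to_body(hand_world, drone_pos, drone_yaw)

        # If the hand is inside any sphere, the drone "feels" it and follows.
        for centre in self.spheres:
            if math.dist(hand, centre) <= SPHERE_RADIUS:
                self._anchor = self._still_since = None
                # Move towards the hand so it drifts back to the sphere centre.
                return tuple(FOLLOW_GAIN * (h - c) for h, c in zip(hand, centre))

        # Otherwise the hand is not sensed; watch for a "hold still" gesture.
        if self._anchor is None or math.dist(hand, self._anchor) > STILL_TOLERANCE:
            self._anchor, self._still_since = hand, t
        elif t - self._still_since >= DWELL_SECONDS:
            self.spheres.append(self._anchor)  # grow the sensory field here
            self._anchor = self._still_since = None
        return (0.0, 0.0, 0.0)


if __name__ == "__main__":
    sf = SensoryField()
    origin = (0.0, 0.0, 0.0)
    # Hold a hand still 0.3 m in front of a hovering drone for two seconds.
    for t in (0.0, 0.5, 1.0, 1.5, 2.0):
        sf.update(t, (0.3, 0.0, 0.0), origin, 0.0)
    print("spheres:", len(sf.spheres))
    # Nudge the hand forward: the drone now senses it and moves to follow.
    print("command:", sf.update(3.0, (0.35, 0.0, 0.0), origin, 0.0))
```

Storing the centres in the body frame is the design choice doing most of the work here: once the user has shaped the field, it travels and rotates with the drone without any extra bookkeeping, and anything outside the spheres is simply ignored.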

These spheres are “part of the drone’s body”, so they move with the drone. In a way, you are deciding where the drone can “feel” while also piloting it. Should it be sensitive in the space immediately in front of it? Or on either side of it?

But shouldn’t it just be everywhere?

Good question! We think the answer is no, for two reasons:

- What we can and cannot sense as humans is what makes us human, and it also allows us to understand other humans. For example, we don’t deliver verbal information directly into other people’s ears at max volume, because we have ears too and we know that sucks. Nor do we demonstrate how to perform a task to someone whose back is turned to us. By the same token, knowing how a machine senses the world also teaches us how to communicate with it. Furthermore, shaping how a machine senses the world allows us to creatively explore what we can do with it.

- To quote science writer Ed Yong, “Nothing can sense everything and nothing needs to”. In other words, we can get the job done without ingesting insane amounts of data or burning even more insane amounts of compute. By cumulatively building an agent’s capacity, in context, with end users, we could end up with agents that are hyper-specialised and resource-efficient. That is a big plus for resource-constrained systems like the Crazyflie, and for our planet at large.

If you are interested in reading more about this research, please check out this paper (if you like to read) or this pictorial (if you prefer to look at pictures). Or just reach out in the comments below!