It’s been a little over a year since we started the ROS Aerial Robotics community group together with the Drone Code Foundation, and it is still going strong (blogpost 1, blogpost 2). Since there is a nice mix of people joining the meetings from different backgrounds and drone operating systems, we have had quite a few discussions and overviews of various topics. For instance, we’ve explored courses in Aerial Robotics and other subjects in previous meetings. An important goal of the group has been to make it easier for people to get started with flying robotics, which we’ve achieved by collecting essential information in the ‘Aerial Robotics Landscape’.

Starting out in Aerial Robotics

Let’s cut to the chase: Aerial Robotics is a very challenging field to get started in. Not only do you need a comprehensive understanding of which hardware to acquire, but you also face multiple choices. These decisions include selecting the right autopilot, a simulator for testing ideas, and the sensors necessary to achieve autonomy. Unlike the well-established Turtlebot in other robotics domains, there isn’t a universally accepted and field-tested getting-started development drone in the aerial robotics world. While we at Bitcraze would love everyone to go for the Crazyflie, we recognize its limitations. For example, it may not handle outdoor flights with GPS or carry heavy cameras effectively. Our goal, as the ‘Aerial Robotics Community group’, is to make it easier for beginners by providing information about the hardware and software they truly need.

Drone Code Foundation and Bitcraze AB gave a joint keynote at ROSCon 2023 about getting started in Aerial Robotics, called ‘Up, Up, and Away: Adventures in Aerial Robotics’. Please take a look at the talk here on Vimeo.

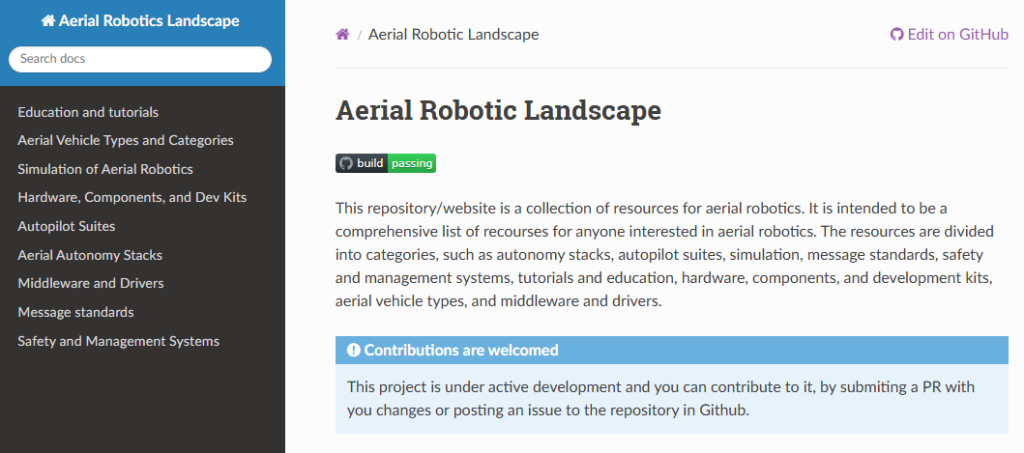

The Aerial Robotics Landscape website

The Aerial Robotics Landscape serves as a repository of information related to all things Aerial Robotics. It started out in the GitHub repository, and it grew due to the discussions held at the aerial robotics community group meetings. Additionally, contributions from both group members and external contributors have played an important role (you can explore the merged PRs).

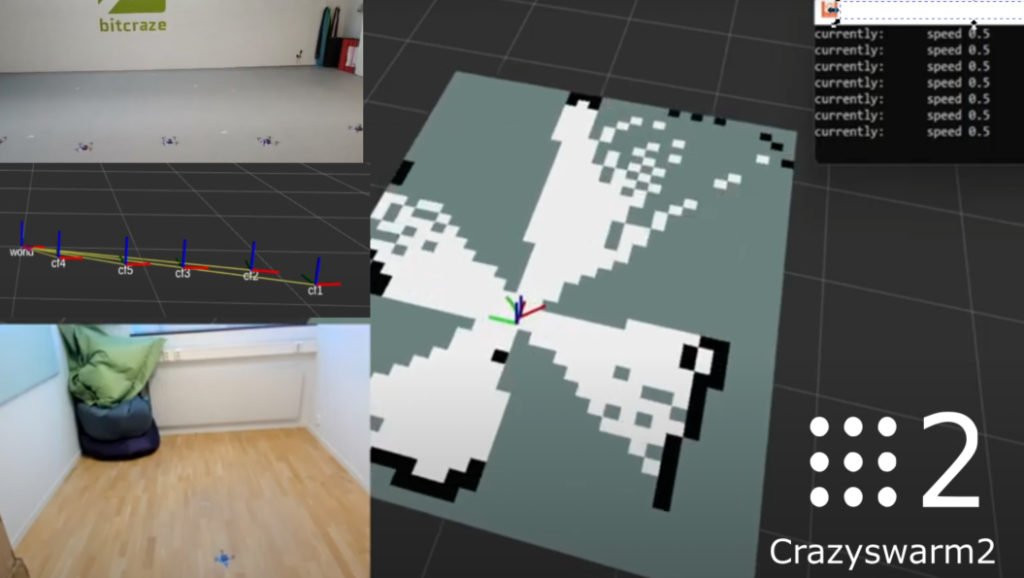

As the pages and tables expanded, it became clear that a better representation was needed than the README on the GitHub repository alone. The group therefore experimented with MkDocs, creating a website in the ‘Read the Docs’ theme. This theme is similar to the one used by important projects within the ROS ecosystem, such as the ROS documentation, as well as ROS 2 packages like Nav2 and Crazyswarm2.

Please take a look at the rendered website here: https://ros-aerial.github.io/aerial_robotic_landscape/

Please contribute!

The Aerial Robotics Landscape is a dynamic one, where development kits emerge while others are discontinued, new simulators rise while some remain unsupported, and autopilot and autonomy features evolve monthly. This ever-changing landscape demands constant updates and additions. We try to do this to the best of our ability, but we can’t do it alone: we need your help.

If you believe that your favorite hardware platform is missing from the landscape, or if you’ve recently developed a new planning algorithm for fixed-wing vehicles or created a YouTube course on optical-based flight, please contribute by means of a pull request to the GitHub repository. We’ve put together a guide on how to contribute to the Aerial Robotics Landscape here. Let’s make the website useful together!

If you’d like to join the ROS Aerial Robotics meetings, please take a look at our community GitHub repository for joining information. The next meeting is on the 5th of June at 4 PM UTC and was announced on ROS Discourse.