For quite a while now, I have been very interested in the Rust programming language and since Jonas joined us we are two rust-enthusiast at Bitcraze. Rust is a relatively recent programming language that aims at being safe, performant and productive. It is a system programming language in the sense that it compiles to machine code with minimal runtime. It prevents a lot of bugs at compile time and it provides great mechanisms for abstraction that makes it sometime feels as high level as languages like Python.

I have been interested in applying my love for Rust at Bitcraze, mostly during fun Fridays. There is two area that I have mainly explored so far: Putting Rust in embedded systems to replace pieces of C, having such a high-level-looking language in embedded is refreshing, and re-writing the Crazyflie lib on PC in rust to make it more performant and more portable. In this blog post I will talk about the later, I keep embedded rust for a future blog post :).

Re-implementing Crazyflie lib

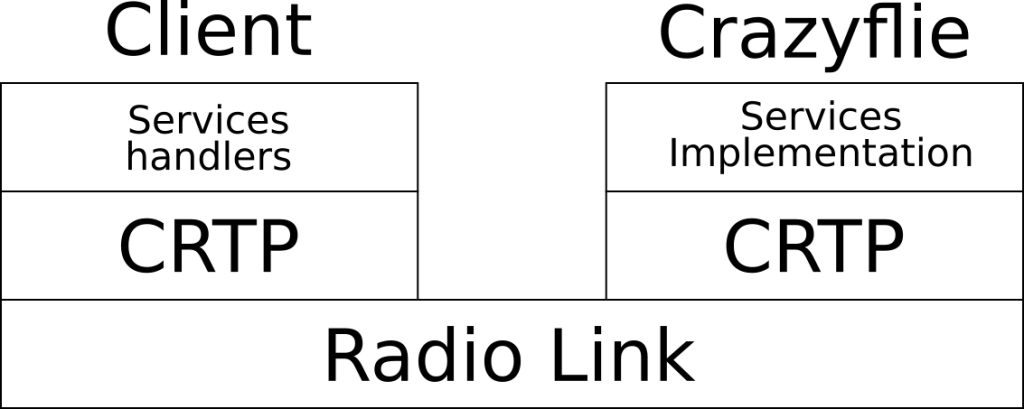

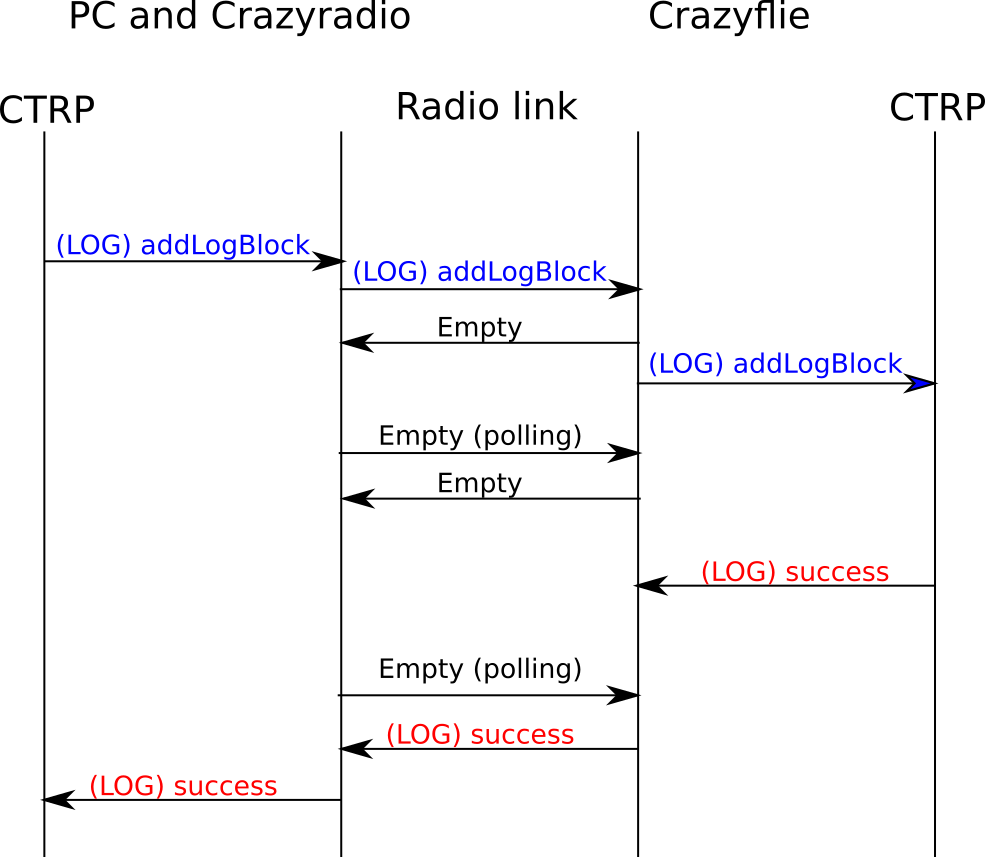

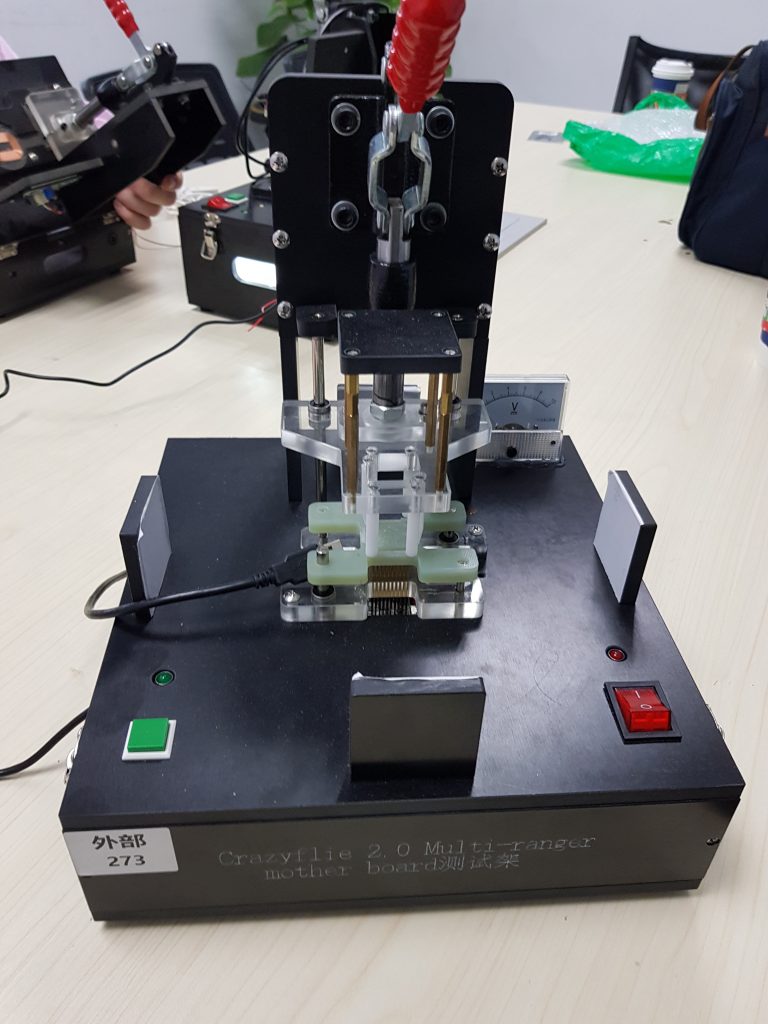

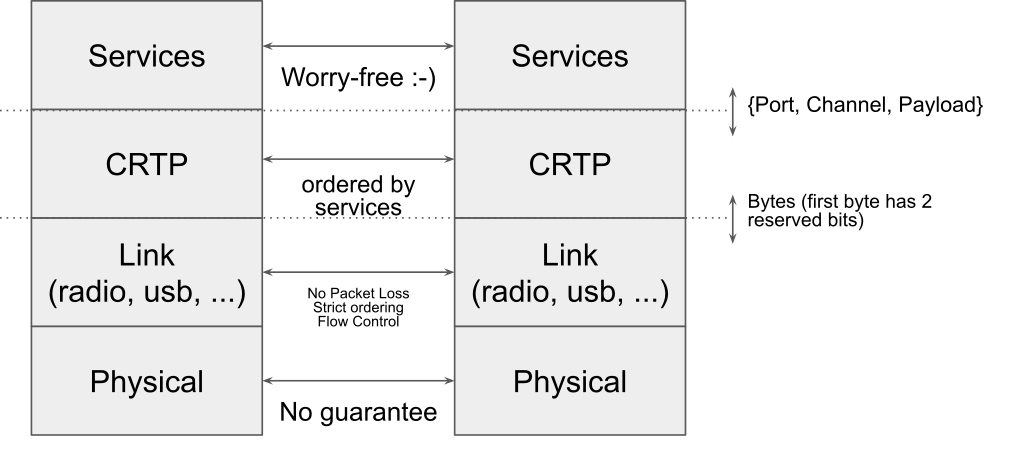

To re-implement the Crazyflie lib, the easiest it to follow the way the communication stack is currently setup, more information can be found on Crtp in a pevious blog post about the Crazyflie radio communication and the communication reliability.

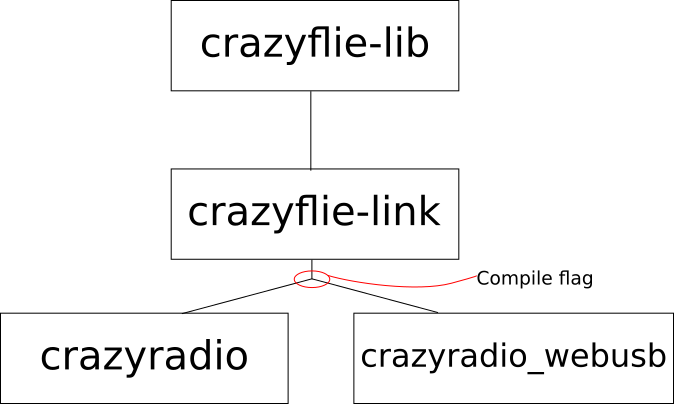

Since I am currently focusing on implementing communication using the Crazyradio dongle, I have separated the implementation in the following modules (A crate is the Rust version of a library):

- Physical: Crazyradio crate.

- Link + CRTP: Crazyflie-link crate.

- Service: Crazyflie-lib crate.

This organization is very similar to the layering that we have in the python crazyflie-lib, the difference being that in the Crazyflie lib all the layers are distributed in the same Python package.

At the time this blog post is written, the Crazyradio crate is full featured. The link is in a good shape and even has a python binding. The Crazyflie lib however is still very much work in progress. I started by implementing the ‘hard’ parts like log and param but more directly useful part like set-points (what is needed to actually fly the Crazyflie) are not implemented yet.

Compiling to the web: Wasm

One of the nice property of Rust is that compiling to different platform is generally easy and seemless. For instance, all the crates talked about previously will compile and run on Windows/Mac/Linux without any modification including the Python binding using only the standard Rust install. One of the Rust supported platform is a bit more special and interesting compared to the other though: WebAssembly.

WebAssembly is a virtual machine that is designed to be targeted by system programming language like C/C++ and Rust. It can be used in standalone (a bit like the Java VM) as well as in a web browses. All modern web browser supports and can run WebAssembly code. WebAssembly can be called from JavaScript.

The WebAssembly in the web is unfortunately not as easy to target as the native Windows/Mac/Linux: WebAssembly does not support threading yet, USB access needs to be handled via WebUSB and since we run in a web browser from JavaScript we have to follow some rules inherited from it. The most important being that the program can never block (ie. std::sync::Mutex shall not be used, I have tried ….).

I made two major modification to my existing code in order to make it possible to run in a web browser:

- crazyflie-link and crazyflie-lib have been re-implemented using Rust async/await. This means that there is no thread needed and Rust async/await interfaces almost seamlessly with Javascript’s promises. The link and lib still compile and work well on native platforms.

- I have created a new crate named crazyradio-webusb (not uploaded yet at the release of this post) that exposes the same API as the crazyradio crates but using WebUSB to communicate with the Crazyradio.

To support the web, the relationship between the crates becomes as follow:

The main goal is to keep the crazyflie-lib and crazyflie-link unmodified. Support for the Crazyradio in native and on the web is handled by two crates that exposes the same async API. The crate used is chosen by a compile flag (called Features in the rust world). This architecture could easily be expanded to other platform like Android or iOS.

Status, demo and future work

I have started getting something working end-to-end in the browser. The lib currently only implements Crazyflie Param and the Log TOC so the current demo scans for Crazyflie, connects the first found Crazyflie and prints the list of parameters with the parameters type and values. It can be found on Crazyflie web client test server. This doesn’t do anything useful now, but I am going to update this server when I make progress, so feel free to visit it in the future :).

Note that WebUSB is currently only implemented by Chromium-based browser so Chrome, Chromium and recent Edge. On Windows you need to install the WinUSB driver for the Crazyradio using Zadig. On Linux/Mac/Android it should work out of the box.

The source code for the Web Client is not pushed on Github yet, once it is, it will be named crazyflie-client-web. It is currently mostly implemented in Rust and it will likely mostly be Rust since it is much easier to stick with one (great!) language. One of the plan is to make a javascript API and to push it on NPM, this will then become a Crazyflie lib usable by anyone on the web from JavaScript (or a bit better, TypeScript …).

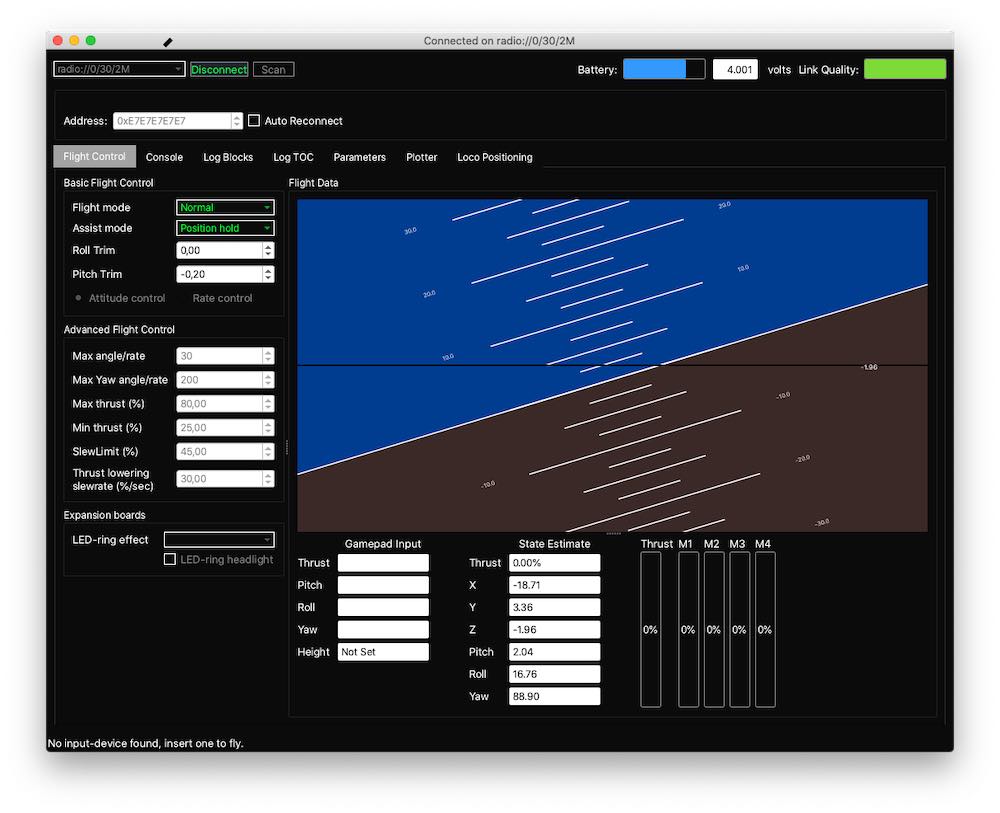

My goal for now is to implement a clone of the Crazyflie Client flight control tab on the web. This would provide a nice way to get started with the Crazyflie without having to install anything.