Hi everyone, here at the Integrated and System Laboratory of the ETH Zürich, we have been working on an exciting project: PULP-DroNet.

Our vision is to enable artificial intelligence-based autonomous navigation on small size flying robots, like the Crazyflie 2.0 (CF) nano-drone.

In this post, we will give you the basic ideas to make the CF able to fly fully autonomously, relying only on onboard computational resources, that means no human operator, no ad-hoc external signals, and no remote base-station!

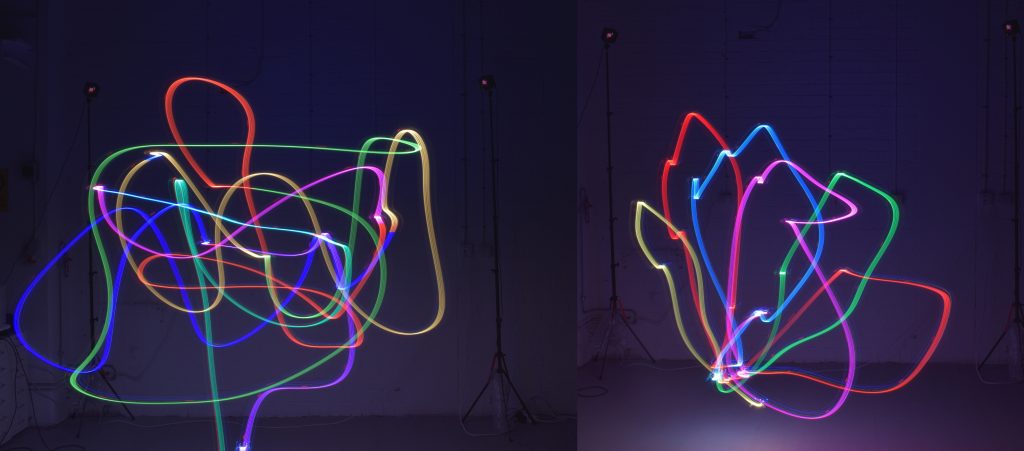

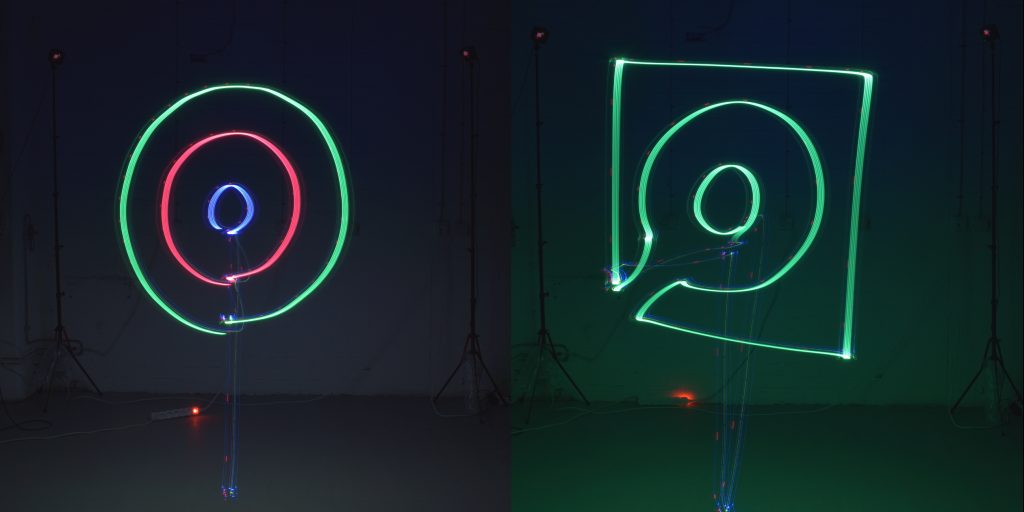

Our prototype can follow a street or a corridor and at the same time avoid collisions with unexpected obstacles even when flying at high speed.

PULP-DroNet is based on the Parallel Ultra Low Power (PULP) project envisioned by the ETH Zürich and the University of Bologna.

In the PULP project, we aim to develop an open-source, scalable hardware and software platform to enable energy-efficient complex computation where the available power envelope is of only a few milliwatts, such as advanced Internet-of-Things nodes, smart sensors — and of course, nano-UAVs. In particular, we address the computational demands of applications that require flexible and advanced processing of data streams generated by sensors such as cameras, which is beyond the capabilities of typical microcontrollers. The PULP project has its roots on the RISC-V instruction set architecture, an innovative academic and research open-source architecture alternative to ARM.

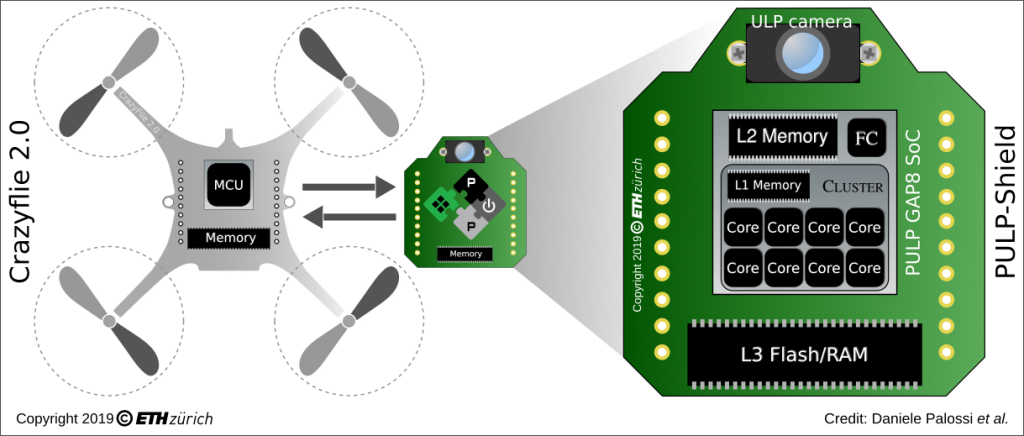

The first step to make the CF autonomous was the design and development of what we called the PULP-Shield, a small form factor pluggable deck for the CF, featuring two off-chip memories (Flash and RAM), a QVGA ultra-low-power grey-scale camera and the PULP GAP8 System-on-Chip (SoC). The GAP8, produced by GreenWaves Technologies, is the first commercially available embodiment of our PULP vision. This SoC features nine general purpose RISC-V-based cores organised in an on-chip microcontroller (1 core, called Fabric Ctrl) and a cluster accelerator of 8 cores, with 64 kB of local L1 memory accessible at high bandwidth from the cluster cores. The SoC also hosts 512kB of L2 memory.

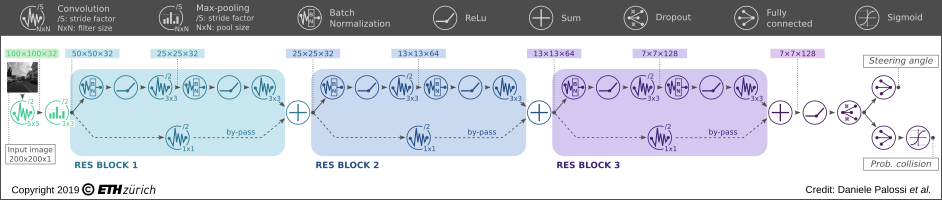

Then, we selected as the algorithmic heart of our autonomous navigation engine an advanced artificial intelligence algorithm based on DroNet, a Convolutional Neural Network (CNN) that was originally developed by our friends at the Robotic and Perception Group (RPG) of the University of Zürich.

To enable the execution of DroNet on our resource-constrained system, we developed a complete methodology to map computationally-intense deep neural networks on the PULP-Shield and the GAP8 SoC.

The network outputs two pieces of information, a probability of collision and a steering angle that are translated in dynamic information used to control the drone: respectively, forward velocity and angular yaw rate. The layout of the network is the following:

Therefore, our mission was to deploy all the required computation onboard our PULP-Shield mounted on the CF, enabling fully autonomous navigation. To put the problem into perspective, in the original work by the RPG, the DroNet CNN enabled autonomous navigation of big-size drones (e.g., the Bebop Parrot). In the original use case, the computational power and memory was not a problem thanks to the streaming of images to a remote base-station, typically a laptop consuming 30-100 Watt or more. So our mission required running a similar workload within 1/1000 of the original power.

To make this work, we combined fixed-point arithmetic (instead of “traditional” floating point), some minimal modification to the original topology, and optimised memory and computation usage. This allowed us to squeeze DroNet in the ultra-small power budget available onboard. Our most energy-efficient configuration delivers 6 frames-per-second (fps) within only 64 mW (including all the electronics on the PULP-Shield), and when we push the PULP platform to its limit, we achieve an impressive 18 fps within just 3.5% of the total CF’s power envelope — the original DroNet was running at 20 fps on an Intel i7.

Do you want to check for yourself? All our hardware and software designs, including our code, schematics, datasets, and trained networks have been released and made available for everyone as open source and open hardware on Github. We look forward to other enthusiasts contributions both in hardware enhancement, as well as software (e.g., smarter networks) to create a great community of people interested in working together on smart nano-drones.

Last but not least, the piece of information you all were waiting. Yes, soon Bitcraze will allow you to enjoy of our PULP-shield, actually, even better, you will play with its evolution! Stay tuned as more information about the “code-name” AI-deck will be released in upcoming posts :-).

If you want to know more about our work:

- preprint arXiv article

- PULP-DroNet release on Github

- PULP Platform home page

- PULP Platform Youtube channel

Questions? Drop us an email (dpalossi at iis.ee.ethz.ch and fconti at iis.ee.ethz.ch)