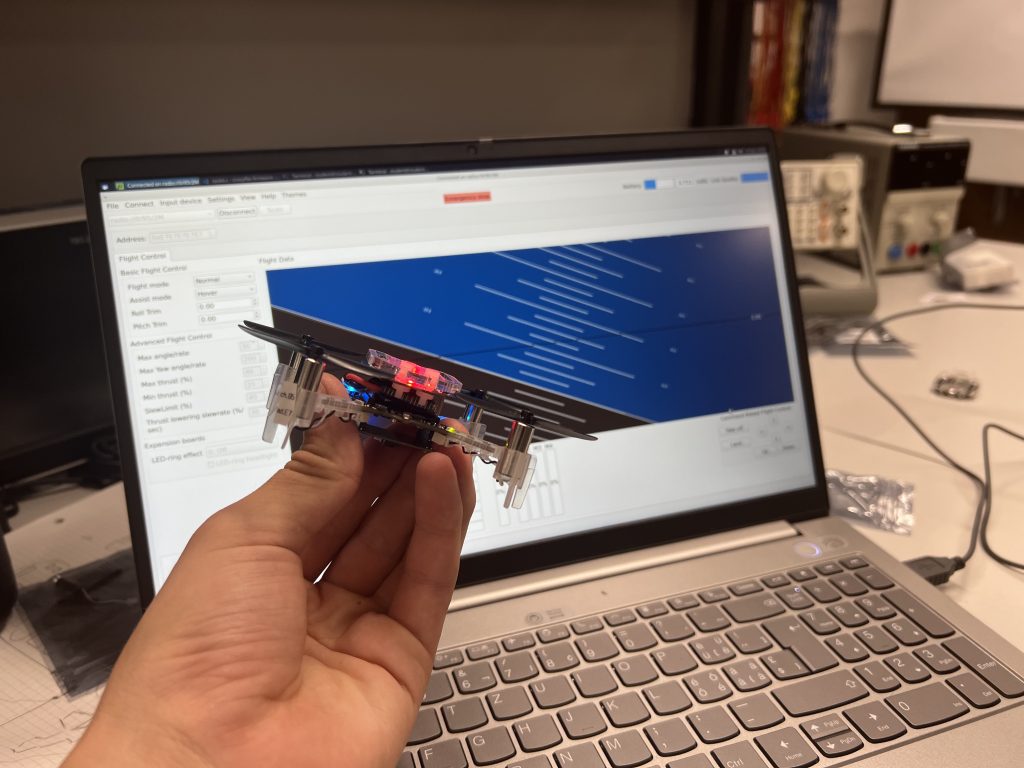

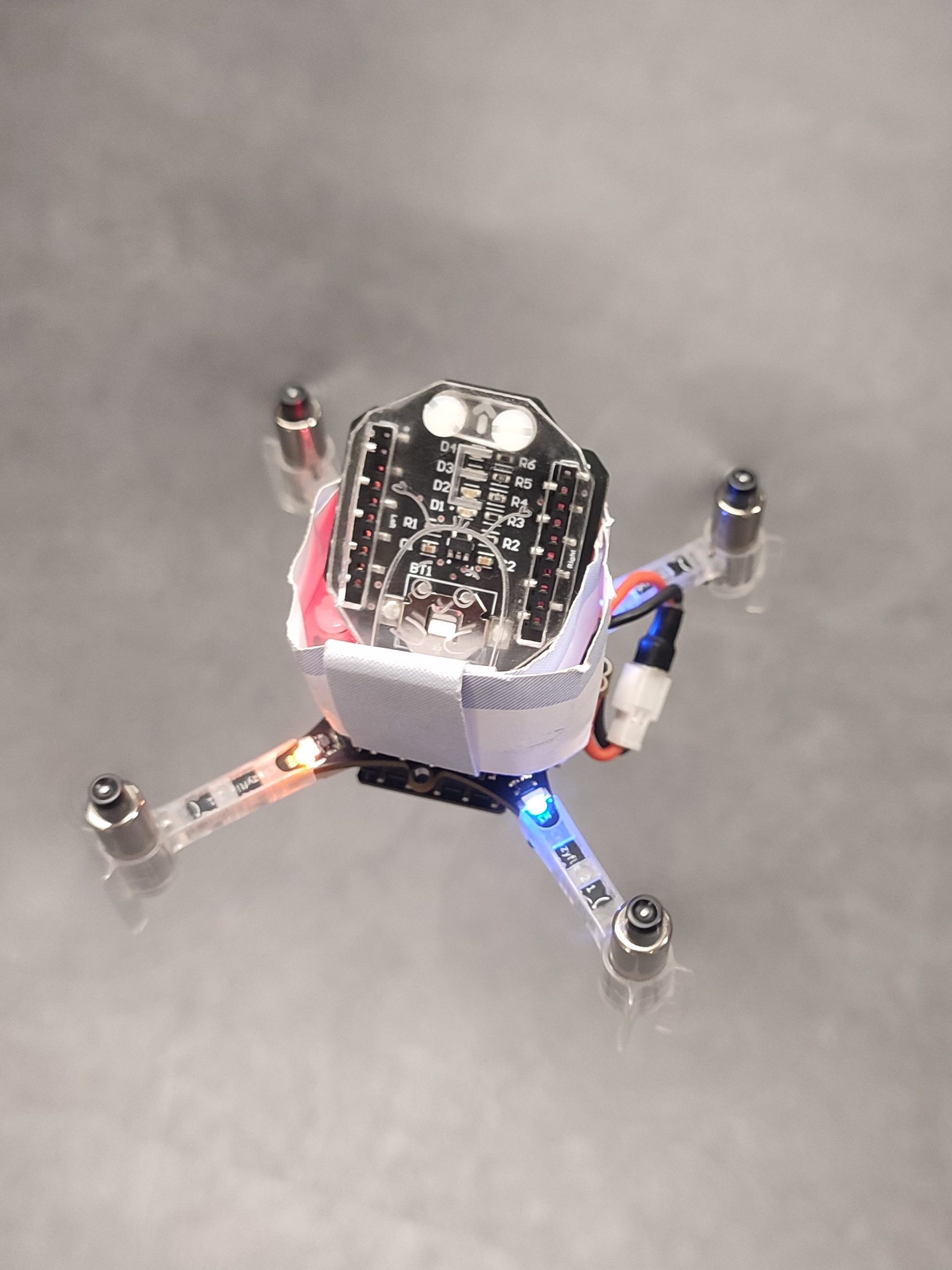

Early this summer my research group (the Center for Project-Based Learning at ETH Zürich) was in charge of a special week: high school students from all over Switzerland (in fact from anywhere in the world, as long as they spoke German) could apply for a study week at different departments of our university. The departments that joined this initiative were mathematics, physics, biology, environmental sciences, materials science, and our department, electrical engineering and information technology (ITET).

But how do you show teenagers aged 15 to 19 as much of electrical engineering as possible in one week while also having fun, and ideally inspire them to study at ITET? Our solution was drones. More specifically, Crazyflies. They gave us many ways to teach electrical engineering, from sensors, microcontrollers, timers, and motors to LEDs, batteries, embedded systems, FreeRTOS tasks, state estimation, and controllers. All this comes with high fun potential and a low risk of accidents: with a weight of only 30 g, they hardly ever do any damage. In this blog post, I will guide you through our week, in the hope of helping others who also want to use the Crazyflie to teach students about electrical engineering in a fun way.

Monday

We started in the afternoon (in the morning they had a welcoming tour) with a short introduction and then split the 20 students into groups of two. Everyone got a paper slip and had to find the matching one: accelerometer and gyroscope, pitch and roll, UART and SPI, and so on. This gives the lecturer a great opportunity to interact with the students later on, once their word becomes relevant during the week. After a short introduction to programming and microcontrollers we moved on to the most classic beginner task: blink an LED! We chose the front left one, as it is only used when communicating, so as long as we don’t connect to the drone we can observe exactly what we programmed. Most students got the LED turned on rather quickly. Pulsing the LED to change its intensity, however, took them some more time and forced them to learn how to write loops. Without knowing it yet, they had also already learned how PWM works: setting the intensity of an LED or the strength of a motor is about the same thing in the end, and this gave us a great start for Tuesday.
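To make the pulsing idea concrete, here is a minimal sketch in plain Python of the two loops involved: a slow triangle ramp that sets the brightness, and a fast on/off pattern (software PWM) that realizes it. It is a simulation only and not the firmware code the students wrote; the function names and the tick counts are hypothetical.

```python
def triangle_duty(step, steps_per_ramp):
    """Duty cycle in [0, 1] that ramps up, then back down, and repeats."""
    phase = step % (2 * steps_per_ramp)
    if phase <= steps_per_ramp:
        return phase / steps_per_ramp
    return (2 * steps_per_ramp - phase) / steps_per_ramp


def pwm_pattern(duty, ticks_per_period):
    """One PWM period as on/off ticks: on for the first `duty` fraction."""
    on_ticks = round(duty * ticks_per_period)
    return [tick < on_ticks for tick in range(ticks_per_period)]


if __name__ == "__main__":
    # Print one full fade-in/fade-out cycle, one PWM period per line.
    for step in range(0, 21, 5):
        duty = triangle_duty(step, steps_per_ramp=10)
        bar = "".join("#" if on else "." for on in pwm_pattern(duty, 8))
        print(f"duty={duty:.2f} {bar}")
```

On real hardware the inner pattern would toggle a pin (or be handled by a timer peripheral) fast enough that the eye only sees the average brightness.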

Tuesday

On Tuesday we looked at hardware from different perspectives. As you might have guessed, we looked at motors and how to control them with PWM and timers. The students were a bit disappointed that we still didn’t fly, but as soon as they realized that they could play their favorite song on the motors the motivation was high again! We didn’t even have any stray drones, even though we let them mount the propellers (the songs sound much better with propellers).
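For the curious, the arithmetic behind a motor song can be sketched as follows, assuming the tone comes from the PWM frequency of the motor signal and that notes follow standard equal temperament with A4 = 440 Hz. The 1 MHz timer clock is a hypothetical placeholder, not the value used on the Crazyflie.

```python
A4_HZ = 440.0   # reference pitch
A4_MIDI = 69    # MIDI note number of A4


def note_frequency(midi_note):
    """Frequency in Hz of a MIDI note number in equal temperament."""
    return A4_HZ * 2 ** ((midi_note - A4_MIDI) / 12)


def timer_period_ticks(freq_hz, timer_clock_hz=1_000_000):
    """Timer ticks per PWM period for a tone (clock rate is an assumption)."""
    return round(timer_clock_hz / freq_hz)


if __name__ == "__main__":
    # C major scale starting at middle C (MIDI note 60).
    for note in (60, 62, 64, 65, 67, 69, 71, 72):
        freq = note_frequency(note)
        print(f"note {note}: {freq:7.2f} Hz, {timer_period_ticks(freq)} ticks")
```

Each semitone multiplies the frequency by the twelfth root of two, so one octave (12 semitones) doubles it and halves the timer period.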

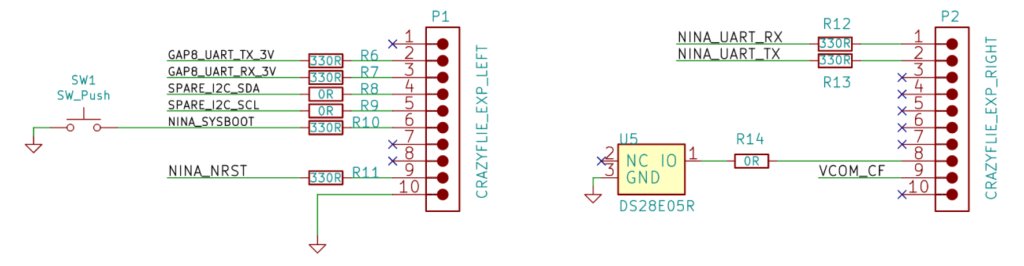

We also looked at another aspect of electrical engineering: PCB design (although we had already done this part for them) and soldering. For this, we prepared a custom deck with four colored LEDs, which could be populated with solder paste and then soldered on a hot plate, just like in industry. To make it even more fun (and partly to show off our laser cutter) they also designed a small plastic diffuser that could be mounted on top. So in the end our setup resembles the LED ring. However, it can be mounted on top, which is essential if you want to fly without a positioning system (and therefore need to mount a flow deck).

Wednesday

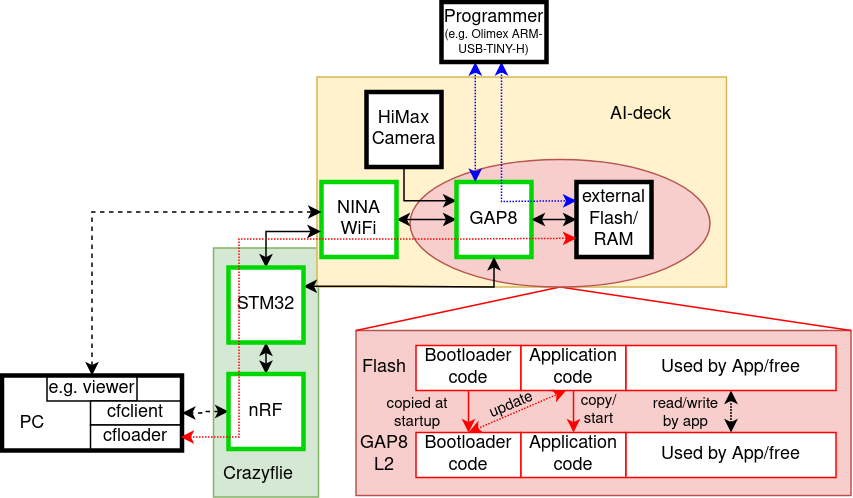

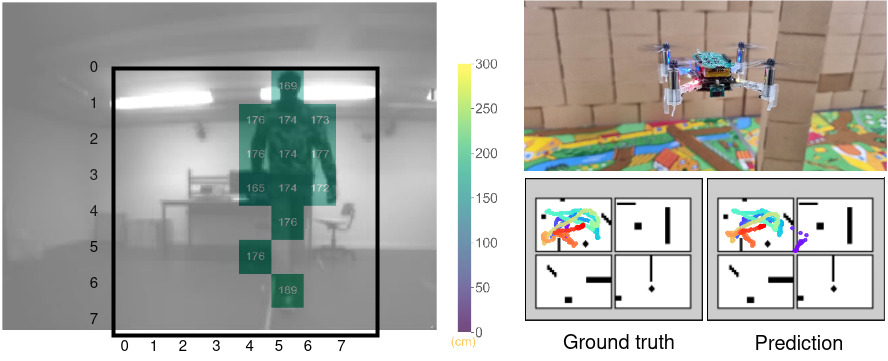

Now that we knew how to blink LEDs and, even more importantly, how to control motors, we had to learn a bit about how the drone actually figures out which motor should be turned on and how much. For this, we first looked at sensors and wired communication protocols, such as I2C (for the IMU and time-of-flight sensor), SPI (for the optical flow), and UART (to the nRF). Due to limited time we didn’t go into details here, though. We briefly touched on wireless communication, to explain how the commands they would later send to the drone (and the firmware) actually reach the right drone.

We moved on to what state estimation is in general, again skipping all details of the extended Kalman filter, but had a closer look at the logging and parameter system. We then spent a bit more time on the PID controller, which was also a bit hard to explain, as half the teenagers hadn’t learned how to integrate and differentiate yet. However, they learned fast and we could move on to the part they had been waiting for all week long: flying (and tuning the PID).
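For students who have not met integrals and derivatives yet, it can help to show that a discrete PID controller only needs a running sum and a difference. The following is a minimal textbook sketch in Python, not the Crazyflie firmware implementation; the class and argument names are my own.

```python
class PID:
    """Textbook discrete PID controller (illustrative sketch only)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0      # running sum of error * dt
        self.prev_error = None   # no previous error before the first update

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        # "Integrating" is just adding up the error over time.
        self.integral += error * dt
        # "Differentiating" is just the change in error per time step.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return (self.kp * error
                + self.ki * self.integral
                + self.kd * derivative)


if __name__ == "__main__":
    pid = PID(kp=2.0, ki=0.5, kd=0.1)
    # Target height 1.0 m, measured 0.8 m, 10 ms time step.
    print(pid.update(setpoint=1.0, measurement=0.8, dt=0.01))
```

Tuning then means changing `kp`, `ki`, and `kd` and watching how the response changes, which is exactly the kind of experiment that works well with a real drone.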

Thursday

This was the day that was meant for creativity: the students could choose for themselves which project they wanted to pursue. We proposed some ideas, such as blinking LEDs depending on the height, flying through a gate (the challenge here is to filter the height measurements when the gate border is below the drone), steering the drone with the keyboard, soldering their own sensor onto a break-out board, …
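One possible way to approach the gate challenge is a sliding median over the last few height readings, which ignores the short jump that appears while the gate border passes under the downward-facing range sensor. This is a sketch of that one technique, not the solution the students used; the class name and the window size of 5 are hypothetical.

```python
from collections import deque
from statistics import median


class MedianHeightFilter:
    """Sliding-median filter that suppresses short spikes in height readings."""

    def __init__(self, window=5):
        # deque with maxlen drops the oldest sample automatically.
        self.samples = deque(maxlen=window)

    def update(self, raw_height_m):
        """Add one raw reading and return the median of the current window."""
        self.samples.append(raw_height_m)
        return median(self.samples)


if __name__ == "__main__":
    height_filter = MedianHeightFilter(window=5)
    # Flying at 1.0 m; the gate border makes two readings look like 0.4 m.
    for raw in [1.0, 1.0, 1.0, 0.4, 0.4, 1.0, 1.0]:
        print(raw, "->", height_filter.update(raw))
```

The trade-off is lag: a window of 5 rejects up to two outlier samples, but a real change in height also takes a few samples to show up in the output.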

In the end, we saw many cool projects: a song played as a canon on multiple motors, a transporter drone, a successful flight through the gate, a successful loop (unfortunately no successful landing yet…), races against each other (possibly with disco lights on the deck), and attempts to reach maximum speed in the hallway.

The most popular base project was to steer the drone with the keyboard. Unfortunately (or fortunately? They sure had fun with it once it was running, and they might have learned enough in the rest of the week…), this was very easy after we showed them where Marcus’ script lives (here) and which 8 lines (170-177) they have to remove for it to work without an AI-deck (and don’t forget to adapt the URI)…

Friday

Friday was presentation day. In the morning they could still work on their projects, but in the afternoon all 120 students (and most of their parents) came together in a huge lecture hall to present what they had done during the week. And, as at a real conference, they had posters and their drones (which we, unfortunately, were not allowed to fly without a fireproof net… we will organize this next time) to show their projects to family, friends, and even random tourists (the entrance hall of the ETH main building is on many sightseeing tours).

At the end of the week I doubted the robustness of Crazyflies for a moment. However, on Monday morning, once I had peace and quiet again, it took me less than an hour to figure out what was wrong with all the hardware that had ended up on the “not working for unknown reasons” stack (and to fix almost all of it). Notes to everyone else and to my future self for the next time I give 10 drones to 20 teenagers:

- If you show them how to tune a PID, also explain that “persistent” means exactly what it says – if you mess up the PID values and persist them they will stay this way until you reset them, no matter how often you reflash the drone.

- Explain how fragile connectors are and that you should NEVER pull on cables. Also, mention ten times more often to be careful when plugging in decks. And radios.

- Keep one “private” drone no one is allowed to mess with. It will help greatly to figure out if they only broke the flow deck connectors or something more serious (which actually never happened).

- Doing only warm boots and setting individual addresses with the CLOAD_CMDS while flashing saved us a lot of trouble. Randomly connecting to the wrong drone only happened once they discovered the app for the phone…

The coding tasks (and at least some minimal solutions) can be found on my fork: Tasks and solutions. They are kept short on purpose – we at the Center for Project-Based Learning believe in our name – we believe the most learning (and fun) happens when you rather freely explore what you can do with the basic tools you just learned.

P.S. For completeness: I cut out all the parts which really had nothing to do with Crazyflies. We also did lab tours in the high-voltage laboratory and the laboratory for optical communication, and of course had some social events with actual university students. As much as we like the Crazyflie, even we have to admit that the field of electrical engineering is even bigger than what we can show with those tiny drones ;)