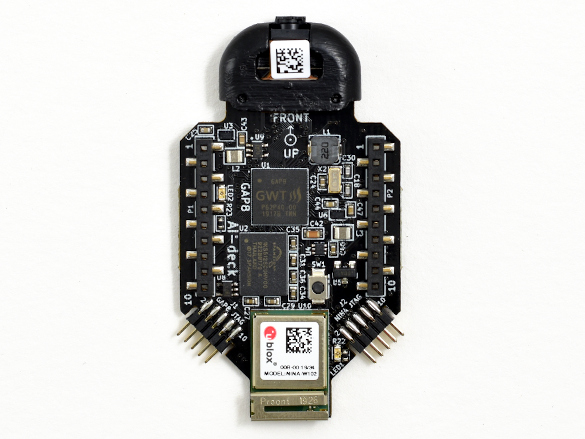

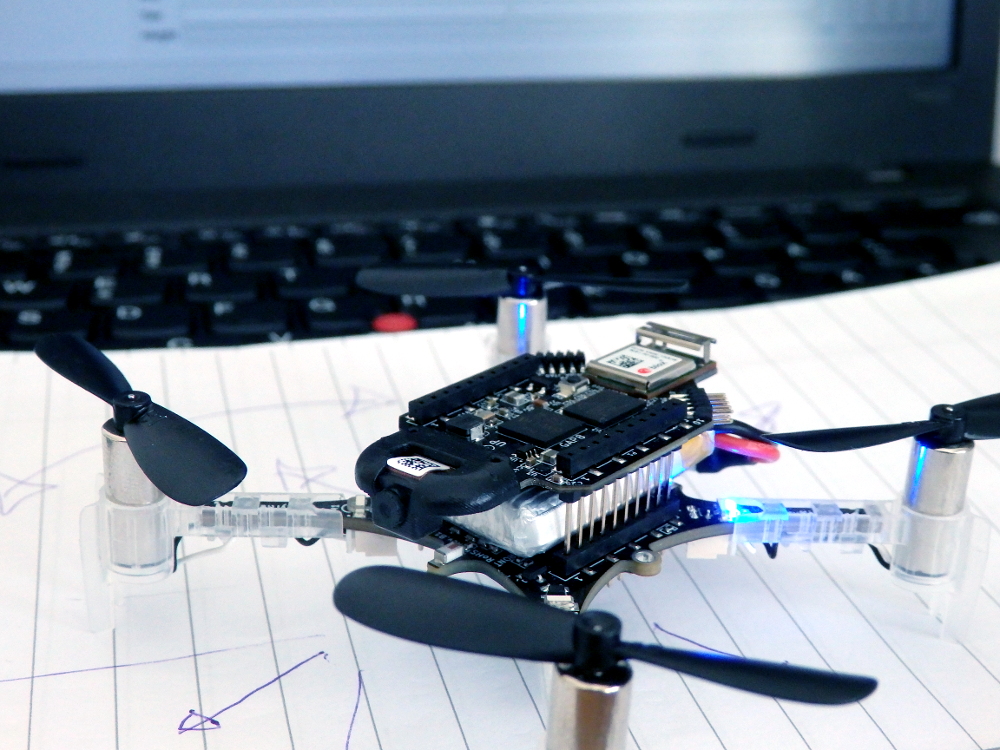

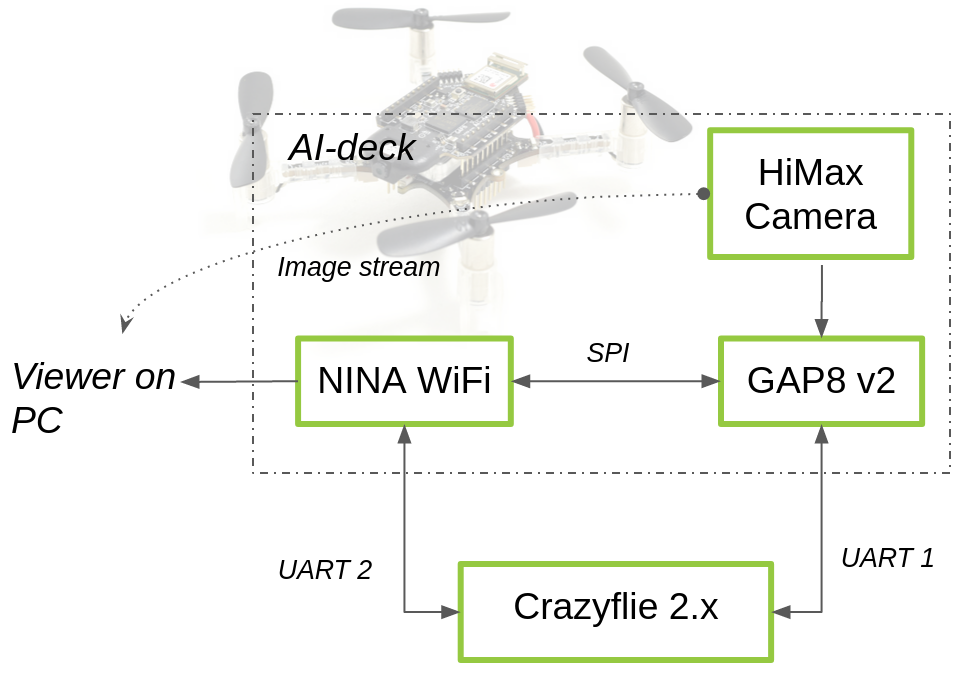

The AI-deck is now available in our online store! Super-edge-computing is now possible on your Crazyflie thanks to the GAP8 IoT application processor from GreenWaves Technologies. GAP8 delivers over 10 GOPS of compute power at exceptionally low power consumption enabling complex tasks such as path-finding and target following on the Crazyflie, consuming less than 0,1% of the total energy.

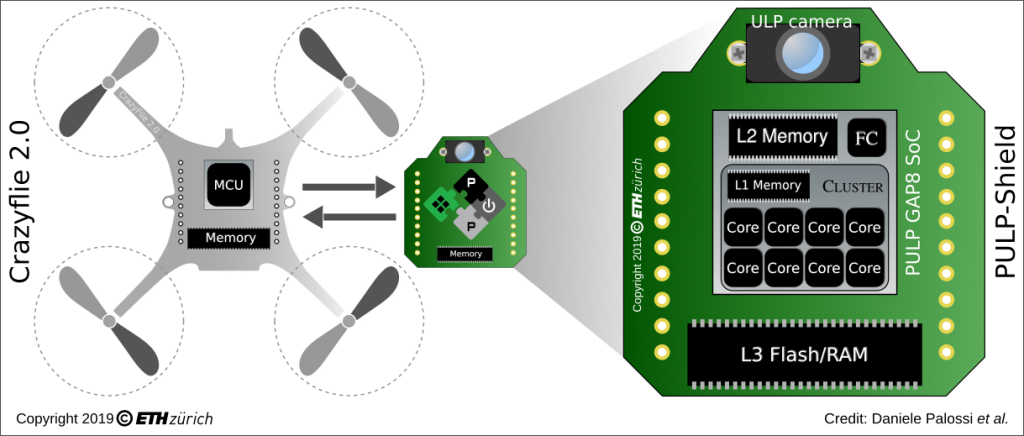

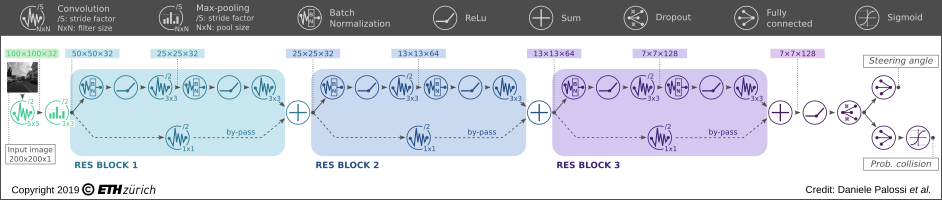

The AI-deck can host artificial intelligence-based workloads like Convolutional Neural Networks onboard. This will open up many research areas focusing on fully onboard autonomous navigation of tiny MAVs, like ETH Zurich’s PULP-Dronet. Moreover, there is also ESP32 WiFi connectivity with the possibility to stream the images to your personal computer.

We are happy that we managed to get everything ready so soon after our last update. Crazyflie AI-deck is in early access, which means the hardware design is now finalized and full support for building, running and debugging applications (including GreenWaves’ GAPflow tools for porting neural networks to GAP from TensorFlow) is available, however, limited examples of specific AI-deck applications have been developed so far. Read more about early access here. Even though there is still some work to be done, there are already some examples you can try out which we will explain in this blog post. Also, we aim to have all AI-decks pre-flashed with the WiFi streamer example so that you check out right away if your AI-deck is working.

Beware that you need an JTAG-enabled programmer/debugger in order to develop for the AI-deck!

Technical specifications

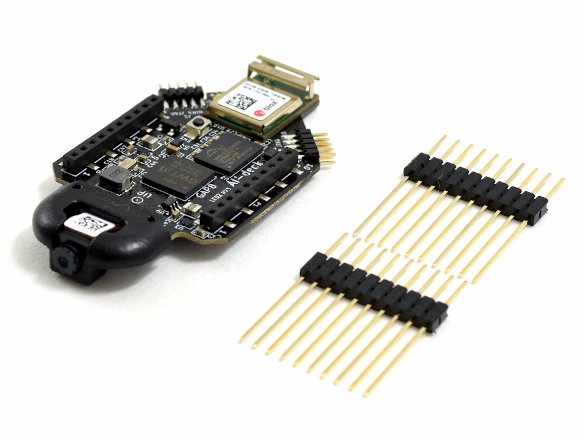

The AI-deck will come with two elongated male pin-headers, which enables the user to connect it to the Crazyflie with an additional deck. There are two 10 pin JTAG connectors soldered which enables connection with a JTAG-enabled programmer. This will be the main way to program the GAP8 chip and the ESP-based NINA module while it is still in early access.

Getting started

When you first receive your AI-deck, it should be flashed with a WiFi streamer example of the camera image stream. Once the AI-deck is powered up by the Crazyflie, it will automatically create a hotspot called ‘Bitcraze AI-deck Example’. In this repo in the folder named ‘NINA’ you will find a file called viewer.py. If you run this with python (preferably version 3), you will be able to see the camera image stream on your computer. This will confirm that your AI-deck is working.

Next step is to go to the docs folder of the AI-deck examples repository. Try out the WiFi demo and set up your development program with the getting-started guide. This guide contains links to the GAP-SDK documentation from GreenWaves Technologies. You can read more about the face detector example that we demonstrated in this blog post.