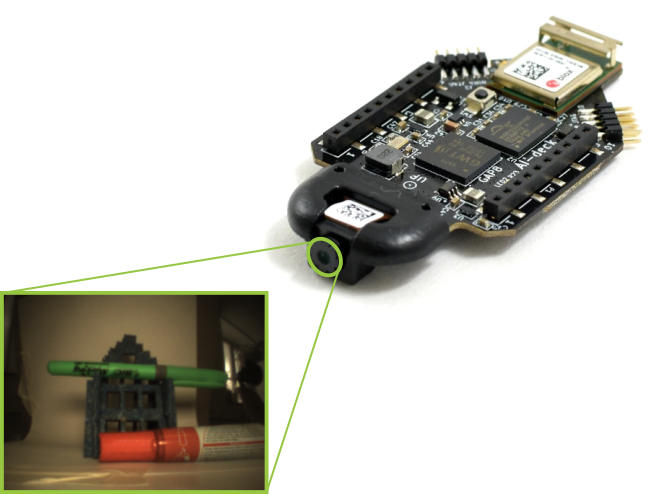

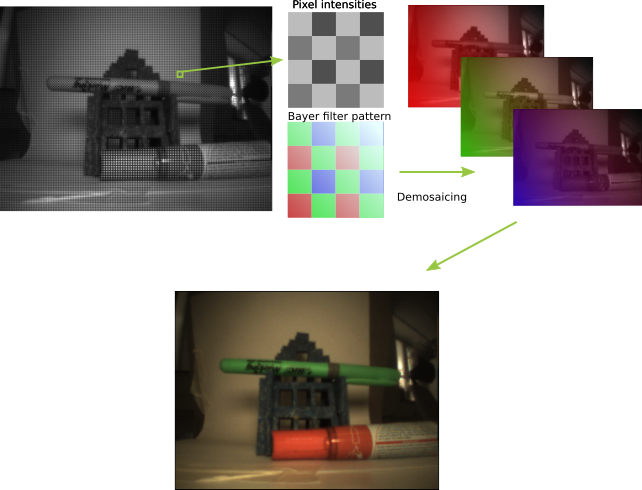

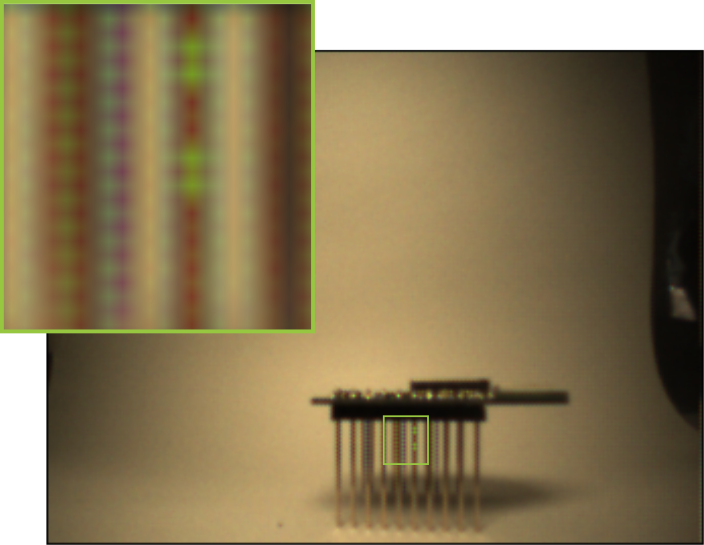

During the last couple of months we’ve been working on getting the AI-deck out of early-access. One of the things needed for this to happen is an improved infrastructure for the AI-deck, like bootloading and how the deck fits into the rest of the eco-system. For this there’s two new repositories:

CPX (Crazyflie Packet eXchange)

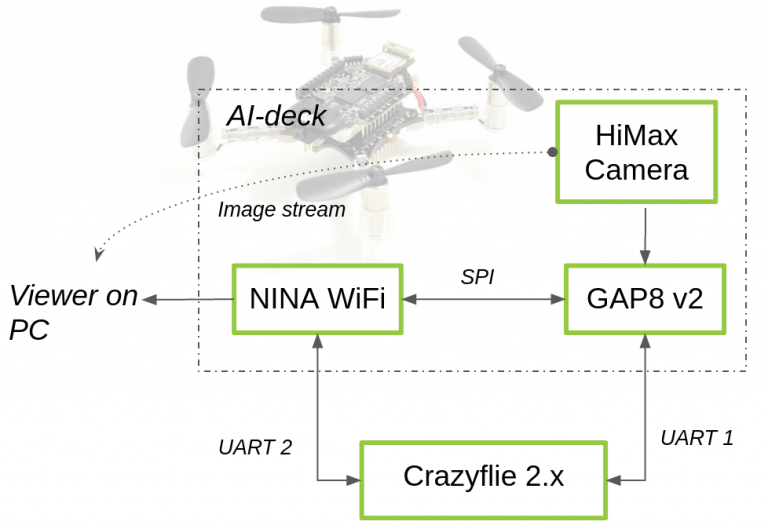

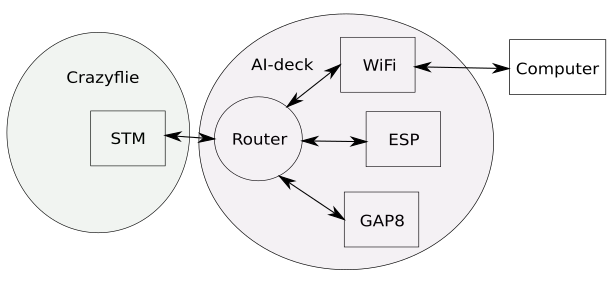

With the addition of two new MCUs (ESP32 and GAP8) as well as the possibility to connect via the WiFi, we quickly run into the issue of how to communicate between the targets. Even more so since there’s no direct access between some of these (like the Crazyflie<->WiFi, Crazyflie<->GAP8, GAP8<->WiFi).

What we needed was a protocol that …

- … could be routed though intermediaries to reach it’s destination

- … could handle high transfer rates with large amounts of data as well as small messages

- … could handle different memory budgets

- … doesn’t drop data along the way if some parts of the system is loaded

We decided to design and implement a new protocol, which we’ve named CPX (Crazyflie Packet eXchange). The protocol solves the issues above by:

- Each packet has a source and destination ID, so it can be routed to (and from) the target of the packet

- Each link between targets can have it’s own MTU, which allows each target to optimize memory usage. In order to handle this, intermediaries are allowed to split packages along the way, so data can be transferred in smaller pieces.

- Instead of dropping packages if targets become overloaded, congestion in created in the system, where the sender will not be able to send more data until the receiver has been able to handle it.

Currently the new protocol is used for the GAP8 bootloader, for setting up the WiFi on the ESP32 and in the WiFi streamer example. But we’re hoping to expand it in the future to include more functionality, like logging and other plumbing that could be used in user applications.

WiFi configuration changes

With CPX it’s now possible to set up the WiFi from either the Crazyflie, the GAP8 or from the ESP32 itself. For doing this from the Crazyflie we’ve added the option of configuring this using KConfig, where we’ve added the following options in the expansion decks menu for the AI-deck:

- Do not set-up the WiFi: Should be used if another target is setting up the WiFi, like the GAP8

- Act as an access point: This will make it possible to connect to the AI-deck as an access point

- Connect to an access point: This will connect the AI-deck to an access point using SSID/PASSWD entered in the menuconfig

GAP 8 bootloader

To make things easier for the user we want to remove the requirement of using a JTAG dongle to program the GAP8. In order to achieve this we’ve implemented a bootloader for the GAP8 which uses CPX, which means it can be used either from the Crazyflie or over the WiFi. We still haven’t had time to implement the Crazyflie part, where this will fit nicely together with the cload and client deck firmware upgrade, but it’s currently working via the WiFi. So until the implementation is done via the Crazyflie Python library, this script can be used to bootload and start your custom GAP8 firmware. Note though, that you will first have to flash the GAP8 bootloader and set-up the WiFi.

What’s next?

We’re continuing working towards getting the AI-deck out of early access. For CPX and the GAP8 bootloader there’s still a few bugs to iron out and examples to be updated as well as improved support for building using our toolbelt.