With spring just around the corner, we thought it was the perfect excuse to make our Crazyflies bloom. The result is a small swarm demo where each drone flies a 3D flower trajectory, all coordinated from a single Crazyradio 2.0. This blog post walks through how it works and highlights two things that made it possible: the new Color LED deck and the Crazyflie Rust library.

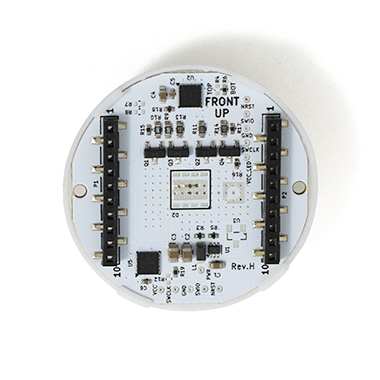

The Color LED deck

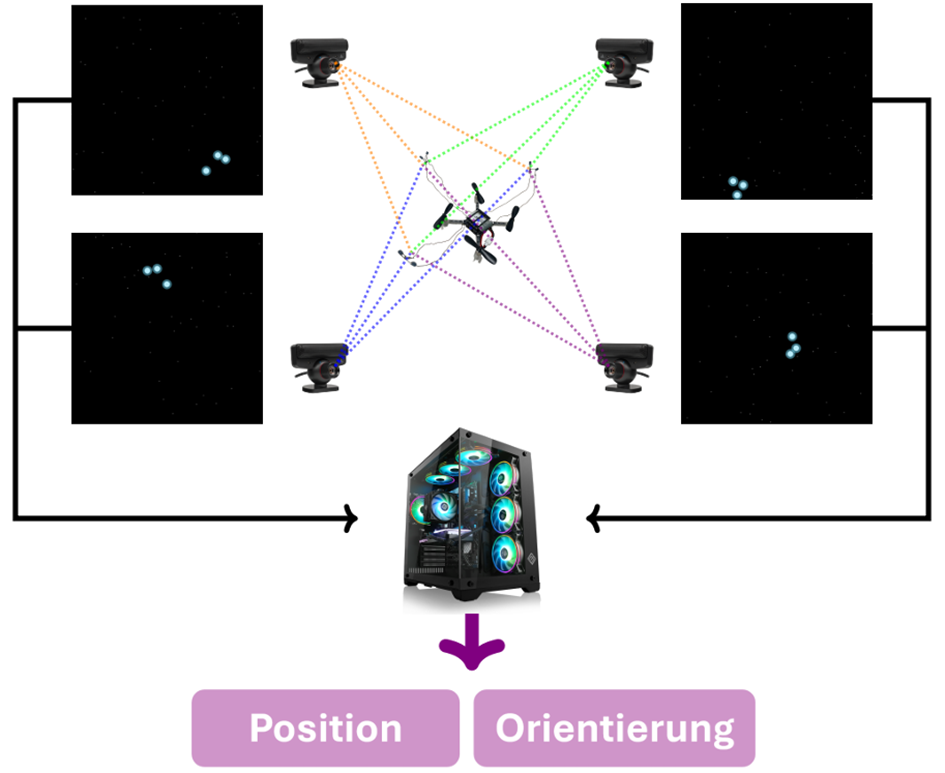

The Color LED deck comes in two versions for the Crazyflie, one mounted on top and one on the bottom. Each has its own LED that can be controlled individually through the standard parameter interface. In this demo we use the bottom-mounted deck to give color to the flowers, together with the Lighthouse deck for accurate positioning in space.

The deck opens up a lot of creative possibilities for swarm demos, and it also gives clear visual feedback about what each drone is doing.

Fast swarm connections with the Crazyflie Rust library

Getting five drones connected quickly on a single Crazyradio used to be a real bottleneck. A big part of the connection time is spent downloading the Table of Contents (TOC), the map of all parameters and log variables the drone exposes. The Crazyflie Rust library introduces a lazy-loading parameter system: parameter values are not downloaded at connect time, but are fetched only when the API user explicitly accesses them.
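The lazy-loading idea can be illustrated with a small, std-only sketch. It is not the Crazyflie Rust library's API: `FakeLink`, `LazyParams` and the parameter names `demo.alpha`/`demo.beta` are hypothetical, and a counter stands in for radio round-trips. Parameter names are known up front (as they would be from the TOC), but a value is only requested the first time it is read and is then memoized.

```rust
use std::cell::{Cell, RefCell};
use std::collections::HashMap;

/// Hypothetical stand-in for the radio link: each call to `read_param`
/// represents one request/response round-trip to the drone.
pub struct FakeLink {
    pub reads: Cell<u32>,
}

impl FakeLink {
    pub fn new() -> Self {
        FakeLink { reads: Cell::new(0) }
    }

    /// Pretend to read a parameter value over the radio.
    fn read_param(&self, name: &str) -> f32 {
        self.reads.set(self.reads.get() + 1);
        name.len() as f32 // dummy value; a real link would decode a reply packet
    }
}

/// Parameter names come from the TOC, but values are fetched
/// only on first access and then cached locally.
pub struct LazyParams<'a> {
    link: &'a FakeLink,
    names: Vec<String>,
    values: RefCell<HashMap<String, f32>>,
}

impl<'a> LazyParams<'a> {
    pub fn new(link: &'a FakeLink, names: &[&str]) -> Self {
        LazyParams {
            link,
            names: names.iter().map(|n| n.to_string()).collect(),
            values: RefCell::new(HashMap::new()),
        }
    }

    /// Returns `None` for names that are not in the TOC.
    pub fn get(&self, name: &str) -> Option<f32> {
        if !self.names.iter().any(|n| n == name) {
            return None;
        }
        let cached = self.values.borrow().get(name).copied();
        if let Some(v) = cached {
            return Some(v); // already fetched: no radio traffic
        }
        let v = self.link.read_param(name); // first access: one round-trip
        self.values.borrow_mut().insert(name.to_string(), v);
        Some(v)
    }
}
```

Creating `LazyParams` costs no round-trips at all; only the parameters a program actually touches generate traffic, which is what keeps connect time short.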

Additionally, the TocCache feature makes it trivial to persist the TOC locally and reuse it on every subsequent connection. In practice, this means that once you have connected to each drone a single time, all future connections are nearly instantaneous. The cache is keyed by the TOC's CRC32 checksum, so a cached TOC stays valid as long as the firmware doesn't change, and the same cached TOC can be reused by every drone that reports the same checksum.
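To show why keying by checksum works, here is a minimal in-memory sketch. `TocStore`, `TocEntry` and `get_or_fetch` are hypothetical names, not the library's `TocCache` interface, and a real cache would also write the TOC to disk. The `fetch` closure stands in for the slow TOC download and only runs when the checksum reported by a drone has not been seen before.

```rust
use std::collections::HashMap;

/// One TOC entry: the variable's id and its "group.name".
#[derive(Clone, Debug, PartialEq)]
pub struct TocEntry {
    pub id: u16,
    pub name: String,
}

/// Hypothetical TOC cache keyed by the CRC32 the drone reports.
pub struct TocStore {
    cache: HashMap<u32, Vec<TocEntry>>,
    pub downloads: u32, // how many times the slow path actually ran
}

impl TocStore {
    pub fn new() -> Self {
        TocStore { cache: HashMap::new(), downloads: 0 }
    }

    /// Return the TOC for `crc32`, calling `fetch` (the download)
    /// only if no TOC with that checksum has been stored yet.
    pub fn get_or_fetch<F>(&mut self, crc32: u32, fetch: F) -> &[TocEntry]
    where
        F: FnOnce() -> Vec<TocEntry>,
    {
        if !self.cache.contains_key(&crc32) {
            self.downloads += 1;
            let toc = fetch();
            self.cache.insert(crc32, toc);
        }
        &self.cache[&crc32]
    }
}
```

Because the key is the checksum rather than the drone's address, five drones running identical firmware share one entry, while a firmware update that changes the TOC yields a new checksum and triggers a fresh download.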

The library also uses Tokio's async runtime, so all Crazyflie connections are started at the same time instead of waiting for each other. Combined with the generally higher communication performance of the Rust implementation, these features significantly reduce startup overhead and make the swarm feel reliable and responsive. Achieving the same with the current Python library would take considerably more effort.
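The effect of starting all connections at once can be estimated with a toy timing model. To keep the sketch dependency-free it uses scoped `std::thread`s as a stand-in for Tokio tasks, and `fake_connect` is a hypothetical placeholder that just sleeps for a fixed latency. Sequential connection time grows with the number of drones, while concurrent connection time stays close to that of the slowest single drone.

```rust
use std::thread;
use std::time::{Duration, Instant};

/// Hypothetical connect step: sleeps to mimic radio latency and
/// returns the drone index once "connected".
fn fake_connect(drone: usize, latency: Duration) -> usize {
    thread::sleep(latency);
    drone
}

/// Connect to `n` drones one after another: total time is about n * latency.
pub fn connect_sequential(n: usize, latency: Duration) -> (Vec<usize>, Duration) {
    let start = Instant::now();
    let ids: Vec<usize> = (0..n).map(|d| fake_connect(d, latency)).collect();
    (ids, start.elapsed())
}

/// Start all connections at once and wait for every one to finish:
/// total time is about one latency. Threads stand in for async tasks here.
pub fn connect_concurrent(n: usize, latency: Duration) -> (Vec<usize>, Duration) {
    let start = Instant::now();
    let ids: Vec<usize> = thread::scope(|s| {
        let handles: Vec<_> = (0..n)
            .map(|d| s.spawn(move || fake_connect(d, latency)))
            .collect();
        // Joining in spawn order keeps the results in drone order.
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    });
    (ids, start.elapsed())
}
```

With five drones and 100 ms of simulated latency each, the sequential version takes at least 500 ms, while the concurrent version finishes in roughly 100 ms.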

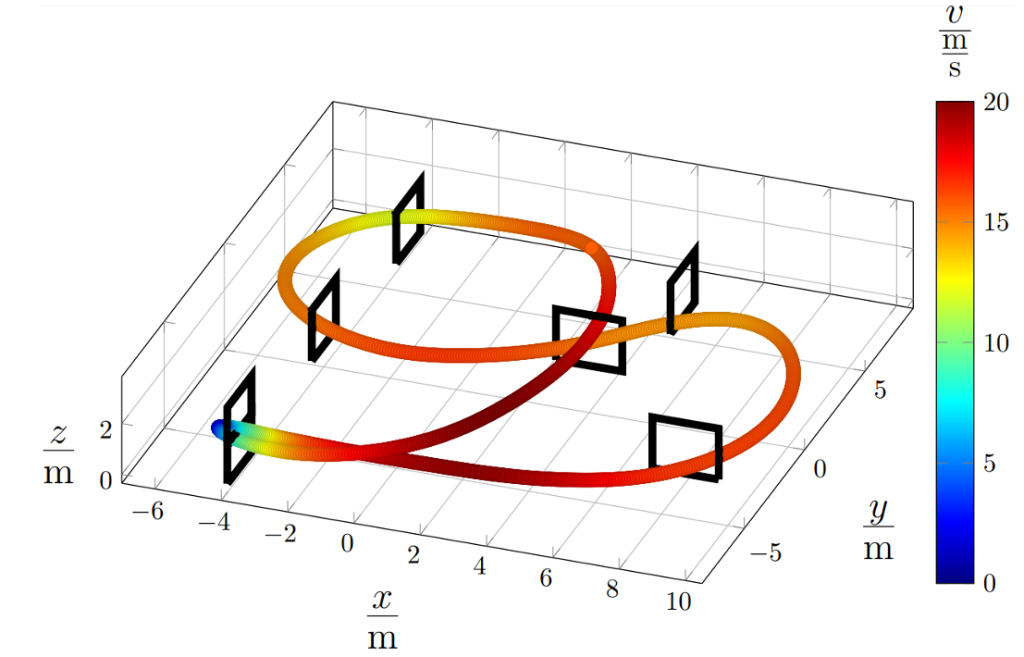

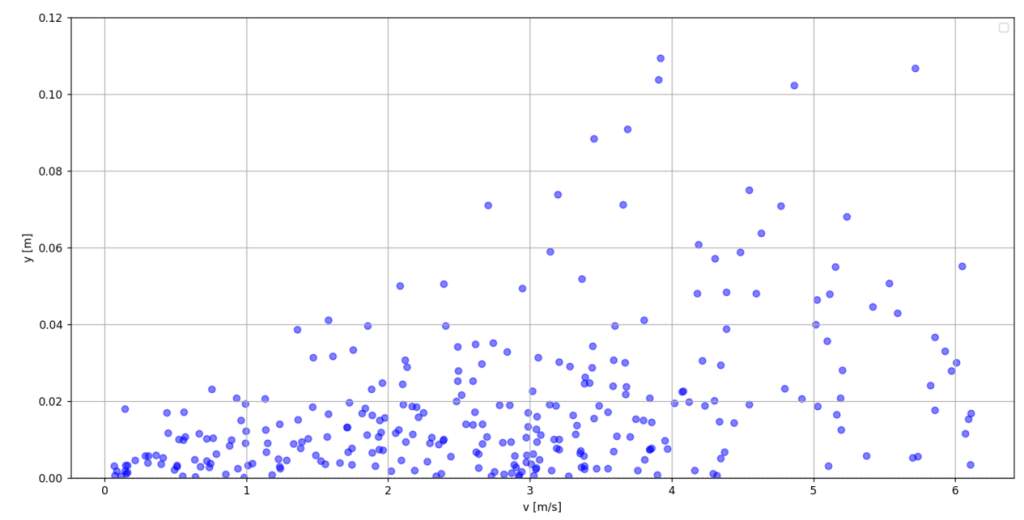

Generating the trajectories

The flower shapes are generated in Python using this script. It produces two .json files per drone (one stem{n} and one petals{n}) containing all the waypoints to fly through. The trajectories are then uploaded to the drone as compressed poly4d segments, a compact format that the Crazyflie’s onboard high-level commander can execute autonomously. Both trajectories are expressed relative to each drone’s initial position, so the formation geometry is entirely determined by where you place the drones on the ground before takeoff.
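The actual generator is the Python script mentioned above. As a rough illustration of the idea of a stem followed by petals, all relative to the take-off point, here is a hypothetical Rust sketch. The shapes are assumptions: a straight vertical stem and a planar rose curve r = R·cos(kθ), which for odd k has k petals and closes after θ = π. `to_world` only shows what "relative to the initial position" means; it is not part of the demo's code.

```rust
use std::f64::consts::PI;

/// A waypoint in metres, expressed relative to the drone's start position.
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Waypoint {
    pub x: f64,
    pub y: f64,
    pub z: f64,
}

/// Hypothetical "stem": a straight climb from the take-off point to `height`.
pub fn stem(height: f64, steps: usize) -> Vec<Waypoint> {
    (0..=steps)
        .map(|i| Waypoint { x: 0.0, y: 0.0, z: height * i as f64 / steps as f64 })
        .collect()
}

/// Hypothetical "petals": rose curve r = radius * cos(k * theta) in a
/// horizontal plane at `height`. For odd k it has k petals and closes at theta = PI.
pub fn petals(k: u32, radius: f64, height: f64, steps: usize) -> Vec<Waypoint> {
    (0..=steps)
        .map(|i| {
            let theta = PI * i as f64 / steps as f64;
            let r = radius * (k as f64 * theta).cos();
            Waypoint { x: r * theta.cos(), y: r * theta.sin(), z: height }
        })
        .collect()
}

/// Shift relative waypoints by the drone's start position (world frame).
pub fn to_world(rel: &[Waypoint], start: Waypoint) -> Vec<Waypoint> {
    rel.iter()
        .map(|w| Waypoint { x: w.x + start.x, y: w.y + start.y, z: w.z + start.z })
        .collect()
}
```

Since every waypoint is relative, the same stem and petal lists can be sent to every drone, and moving a drone on the ground moves its whole flower with it.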

Putting them all together

The flight sequence is pretty straightforward:

1. Build the trajectories as waypoints on the host.

2. Connect to all drones simultaneously.

3. Upload each drone’s compressed trajectories in parallel.

4. Fly the trajectories while switching the LED colors.

Everything after the connection is driven by Tokio's join_all, so the swarm stays in sync without any explicit synchronization logic: the drones are simply given the same commands at the same time.
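The lockstep behaviour comes from the fact that each step waits until every drone has finished it before the next step starts. The sketch below reproduces that pattern without Tokio, again using scoped `std::thread`s in place of futures passed to join_all. `send_command` is a hypothetical placeholder for "upload trajectory", "start trajectory" or "set LED color"; it sleeps for a drone-specific time so the drones finish at different moments.

```rust
use std::sync::Mutex;
use std::thread;
use std::time::Duration;

/// Hypothetical per-drone command. It sleeps for a drone-specific time
/// and records (step, drone) in the shared log when it completes.
fn send_command(step: usize, drone: usize, log: &Mutex<Vec<(usize, usize)>>) {
    thread::sleep(Duration::from_millis(5 * (drone as u64 + 1)));
    log.lock().unwrap().push((step, drone));
}

/// Run each step on all drones at once and wait for all of them
/// before moving on, like awaiting join_all over one future per drone.
pub fn run_in_lockstep(steps: usize, drones: usize) -> Vec<(usize, usize)> {
    let log = Mutex::new(Vec::new());
    for step in 0..steps {
        thread::scope(|s| {
            for drone in 0..drones {
                let log = &log;
                s.spawn(move || send_command(step, drone, log));
            }
        }); // the scope returns only after every spawned thread has finished
    }
    log.into_inner().unwrap()
}
```

In the returned log, step numbers never decrease: no drone begins step k + 1 until the slowest drone has completed step k, which is the only synchronization the sequence needs.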

The full source code is available at this repository (Python for trajectory generation, Rust for flying).

We're excited about where the Rust library is heading. It improves communication with the Crazyflie and lets us dramatically increase the number of Crazyflies per Crazyradio, leading to bigger and more reliable swarms. If you build something cool with it, let us know!